Zeitschrift für Pädagogische Psychologie / German Journal of Educational Psychology, 8 (2), 1994, 89-97

Siu L. CHOW

The Experimenter's Expectancy Effect: A Meta-experiment


Summary: The claim that the outcome of an experiment may be determined by what its experimenter expects to obtain was empirically assessed with a meta-experiment. Three groups of experimenters were asked to conduct Rosenthal & Fode's (1963a) photo-rating task under two conditions which jointly satisfied the formal requirement of an experiment. The three groups of experimenters were given different information about the expected outcome. There was no evidence of an experimenter's expectancy effect when the effect was properly defined in terms of the difference between the two conditions. Some issues raised by Rosenthal & Fode's (1963a) study, in its capacity as evidence for the experimenter's expectancy effect in particular, are examined. Also discussed are a few metatheoretical issues raised by, and some pedagogical implications of, accepting the experimenter's expectancy effect uncritically.

1. Introduction

Psychology students are introduced to various research methods (viz., non-experimental, experimental and quasi-experimental). Moreover, they are exhorted to be methodologically, as well as conceptually, rigorous and to use well-defined terminology in a consistent and precise manner. It is thus hoped that students may become competent in separating valid from invalid research conclusions based on empirical studies. Included in almost all methodology textbooks is a discussion of some probable pitfalls confronting an experimenter, one of which is the experimenter effect. This is the claim that the result obtained in an experiment depends on who conducts the experiment. It is further claimed that this state of affairs may be brought about by the experimenter's expectancy regarding the experimental outcome, a phenomenon known as the experimenter's expectancy effect (called EEE in subsequent discussion). More specifically, the central thesis of EEE is that experimenters obtain the data they expect to obtain.

The EEE claim was made on the basis of Rosenthal & Fode's (1963a,b) studies. As both of these studies have the same design, Rosenthal & Fode's (1963a) photo-rating study will be used to show why the results of these studies do not warrant the EEE claim. Three assumptions underlie the putative EEE. (1) Experimenters behave in a way consistent with their experimental hypotheses for various extra-research reasons (e.g., the experimenter's vested interest, prestige, personal pride, etc.). (2) Subjects in an experiment endeavor to behave in a way consistent with their experimenter's wishes. (3) Neither experimenters nor subjects are aware of the processes envisaged in assumptions 1 and 2 (Rosenthal 1976; 1969; Rosenthal & Rosnow 1969; 1975).

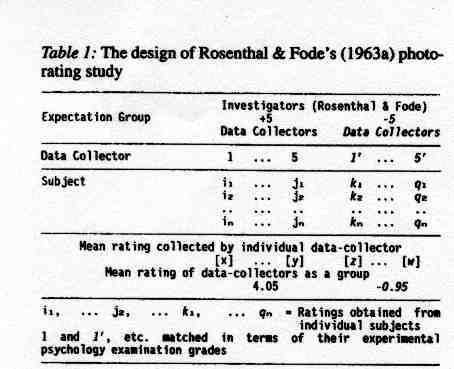

The design of Rosenthal & Fode's (1963a) photo-rating study is schematically represented in Table 1. Two groups of undergraduate students (called student data-collectors in subsequent discussion) were asked to replicate some "well-established" experimental findings. These student data-collectors showed the participants in the investigation (called photo-raters subsequently) a series of photographs of single faces with a "neutral" expression (i.e., the photos "were rated on the average as neither successes nor failures" by independent raters before the investigation; Rosenthal & Fode 1963a, 494).

The photo-rating task was presented by student data-collectors to photo-raters in the context of "developing a test of empathy" (Rosenthal & Fode 1963a, 507). The photo-raters were asked to judge whether or not the face shown on a trial belonged to someone who had recently experienced a success or a failure. The photo-raters indicated their responses on a 21-point scale (from -10 to +10). A negative number represented failure (-10 indicated "extreme failure"), a positive number represented success (+10 represented "extreme success").

The two groups of student data-collectors were independently led to believe that previous researchers had obtained different results. More specifically, students in the "+5" and "-5" groups were separately told that previous average ratings were +5 and -5 respectively. As may be seen from Table 1, the mean ratings obtained by Rosenthal & Fode's (1963a) data collectors in the "+5" and "-5" groups were 4.05 and -0.95, respectively. This is the empirical evidence for the EEE claim.

1.1. Investigator, Experimenter and Data Collector

It is impressed upon all students of psychology by research methods textbooks that a control condition MUST be present in an experiment. More specifically, the minimal requirement of an experiment is that data be collected in at least two conditions which are identical in all aspects but one. This formal requirement is, in fact, an exemplification of one of Mill's (1973) methods of scientific inquiry, namely, the method of difference (Boring 1954; Cohen & Nagel 1934). Satisfying the formal stipulation of the method of difference makes it possible to unambiguously exclude at least one alternative explanation of the data (Chow 1987c; Cohen & Nagel 1934). As may be seen from Table 1, Rosenthal & Fode (1963a) themselves collected data under two conditions (viz., the "+5" and the "-5" conditions). The two conditions were made comparable in a crucial aspect by matching the data-collectors in terms of their experimental psychology examination grades. Other extraneous variables were made comparable between the two groups of student data-collectors by assigning the students randomly to the "+5" and "-5" groups. Hence, Rosenthal & Fode (1963a) themselves conducted an experiment. However, what they did was not sufficient for their purpose, namely, to provide empirical evidence for EEE.

Although Rosenthal & Fode (1963a) were experimenters vis-à-vis their student data-collectors, their student data-collectors themselves were not experimenters vis-à-vis the photo-raters. Hence, it is important to identify Rosenthal & Fode as investigators vis-à-vis the photo-raters (Barber & Silver 1968a,b). As investigators, Rosenthal & Fode (1963a) wished to show experimentally that experimenters who were led to expect different experimental outcomes would obtain different experimental results. Given such an objective, Rosenthal & Fode (1963a) were obliged to give their student data-collectors an experiment to conduct. Yet, their students (qua data-collectors) collected photo-rating responses under only one condition, in violation of the formal requirement of method of difference (see Boring 1954). In other words, Rosenthal & Fode's (1963a) data-collectors did not conduct an experiment when they collected the photo-rating data. The photo-raters merely engaged in a measurement exercise (Stevens 1968). It is, hence, misleading to characterize Rosenthal & Fode's (1963a,b) data-collectors as experimenters because the student data-collectors did not conduct an experiment.

The difference between the mean ratings collected by the "+5" and the "-5" groups of Rosenthal & Fode's (1963a) data-collectors (viz., the difference between 4.05 and -0.95 in Table 1) means only that data-collectors given different information may obtain different results when given a measurement exercise. However, the said difference does not warrant the conclusion that experimenters expecting different experimental outcomes would obtain different experimental results because what is true of a measurement exercise need not be true of an experiment.

1.2.

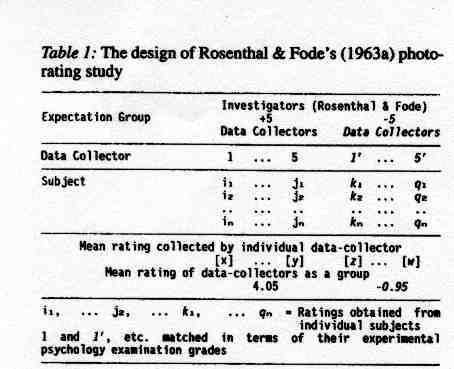

Meta-experiment and ExperimentIn other words, every data-collector must be given an experiment to conduct before any conclusion about the putative EEE can be drawn. Hence, in order to study EEE experimentally, an investigator must conduct an experiment at a higher level of abstraction. As such an empirical study is an experiment about experimentation, it may be called a "meta-experiment". The basic design of a meta-experiment necessary for the investigation of EEE has been depicted in Table 2.

Individuals M, N, P and Q can properly be characterized as experimenters because they collect observations under two conditions which are identical in all aspects but one. The mean difference between the experimental and the control conditions is first calculated for each experimenter (e.g., $\bar{X} = \bar{x} - \bar{x}'$, $\bar{Z} = \bar{z} - \bar{z}'$, etc.). The mean of the differences obtained by the "+5" group of experimenters is then compared to the mean of the differences obtained by the "-5" group of experimenters (i.e., the mean of $\bar{X}, \ldots, \bar{Y}$ minus the mean of $\bar{Z}, \ldots, \bar{W}$). Neither of Rosenthal & Fode's (1963a,b) studies followed the design shown in Table 2. Contrary to Jung's (1978) assertion, the studies summarized by Rosenthal & Rubin (1978) are not meta-experiments because they do not have a design similar to the one shown in Table 2. That is, Rosenthal & Fode (1963a,b) used an incorrect paradigm to study EEE.
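The order of operations prescribed by the Table 2 design can be sketched in Python. This is a minimal illustration under stated assumptions: the experimenter labels (E1 to E4) and all numbers are hypothetical, not data from Table 2 or Table 3, and each experimenter is represented only by his or her two condition means. The point is that a difference is taken within each experimenter first, and only then are the expectancy groups compared.

```python
from statistics import mean

# Hypothetical condition means for four experimenters (illustrative only,
# NOT data from the paper). Each tuple is (experimental mean, control mean).
plus5_group = {"E1": (4.0, 0.5), "E2": (3.5, -0.5)}
minus5_group = {"E3": (3.0, -1.0), "E4": (2.5, -1.5)}


def experimenter_differences(group):
    """Experimental-minus-control mean difference for each experimenter."""
    return {name: exp - ctrl for name, (exp, ctrl) in group.items()}


def meta_experimental_effect(group_a, group_b):
    """Mean of group A's within-experimenter differences minus group B's."""
    diffs_a = list(experimenter_differences(group_a).values())
    diffs_b = list(experimenter_differences(group_b).values())
    return mean(diffs_a) - mean(diffs_b)


if __name__ == "__main__":
    print(experimenter_differences(plus5_group))  # {'E1': 3.5, 'E2': 4.0}
    print(meta_experimental_effect(plus5_group, minus5_group))  # -0.25
```

By contrast, in the Table 1 design each data-collector contributes measurements from a single condition only, so no within-experimenter difference can be formed; only a difference between group means is available.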

One may be tempted to defend EEE in the following way. Many empirical studies have been cited in support of the interpersonal expectancy effect in general (see Rosenthal & Rubin 1978), and the teacher's expectancy effect in particular (see Brophy 1983; Dusek, Hall, & Meyer 1985; Rosenthal & Rubin 1971). Moreover, Harris & Rosenthal (1985) reported a meta-analytic study to support Rosenthal's (1973) model of the teacher's expectancy effect. It may be suggested that the interpersonal expectancy effect and the teacher's expectancy effect provide support for the experimenter's expectancy effect. However, there are three reasons to question the validity of these studies as evidence for the teacher's expectancy effect or the interpersonal expectancy effect.

First, the term "expectancy" is often used in a misleading way in the Pygmalion-effects literature. As has been argued by Chow (1990), the putative teacher's expectancy effect is made up of four components, namely, (a) a particular psychological mechanism activated in a teacher upon receiving some information, (b) this mechanism inevitably, and without the teacher's awareness, determines the teacher's subsequent behavior towards his or her students, (c) students thus treated are inevitably informed, again without being aware of it, of the teacher's idea about them, and (d) students behave in a way consistent with the teacher's idea of them. The information about a student is merely what activates component (a). Hence, it is incorrect to use the term "expectancy" to mean the information about a student, despite the fact that this is quite a common practice in the discussion of Pygmalion effects.

The second issue is related to the first. Components (a) through (d) are integrated into what Cooper (1979, 39) called an influence sequence (hence the word "inevitably" in the characterization above). Harris & Rosenthal (1985) correctly argued that acceptance of the influence sequence depended on demonstrating an inevitable sequence from component (a) to component (b), and another sequence from component (b) to the joint occurrence of components (c) and (d) [called "(c) and (d)" in subsequent discussion]. They attempted such a demonstration with multiple meta-analyses. They first demonstrated the (a) to (b) link with one set of studies. They then showed the (b) to "(c) and (d)" link with another set of studies. However, there was no indication as to whether or not the two sets of studies overlapped at all (i.e., whether or not component (b) in the first set was the same as component (b) in the second set). For this reason, Harris & Rosenthal's (1985) claim in regard to the influence sequence is questionable (Chow 1987a).

Third, some conceptual difficulties with the empirical studies of the teacher's expectancy effect may be pointed out. To demonstrate the teacher's expectancy effect, an investigator tells a teacher something about a student. Chow (1990) shows that this information may, in fact, be interpreted differently by different teachers. For example, the information may be interpreted as a goal prescription, a theoretical prescription or a criterion of professional adequacy. The adequacy-criterion interpretation is particularly relevant to the study of EEE. When told to replicate some earlier findings, a novice data-collector may feel inadequate if the data collected are not as prescribed. That is to say, such data-collectors self-consciously assess their own performance. This cannot be the unintentional process envisaged in the EEE argument.

Nonetheless, for the sake of argument, assume that the interpersonal expectancy effect and the teacher's expectancy effect were valid. Even given this assumption, the two effects do not (and cannot) lend support to the experimenter's expectancy effect, for the following reason. To conduct an experiment is to collect observations under conditions which satisfy the formal requirement of one of Mill's (1973) four methods of scientific inquiry (Chow 1987c). Everyday activities (including teachers' activities) do not have to meet the formal requirement of a method of scientific inquiry. In other words, to be an experimenter is to play a unique role, very different from that played by a teacher or by anyone else who is not conducting an experiment. Hence, it is incorrect to treat the experimenter's expectancy effect as though it were similar to the teacher's expectancy effect. Nor should the experimenter's expectancy effect be treated as an exemplar of the interpersonal expectancy effect.

2. A Meta-experiment

In view of the fact that there is no valid evidence in support of EEE, a meta-experiment was conducted with Rosenthal & Fode's (1963a) photo-rating task. The basic requirement of an experiment was met for the experimenters in the to-be-reported meta-experiment by having photographs of smiling faces on half of the trials and photographs of sad faces on the other half. What should the outcome of this meta-experiment be in order for the EEE claim to be true? This is a question about the theoretical prescriptions of the EEE hypothesis.

At the logical level, a theoretical prescription is a deduction from a to-be-tested hypothesis in the context of a set of auxiliary assumptions (Meehl 1978; 1990; Chow 1987c; 1989; 1992). An attempt to deduce an implication from EEE at once reveals a difficulty with the EEE claim, namely, that EEE is mute as to the theoretical properties of the putative EEE mechanism (apart from its being an unintentional process). That is, we do not know what the putative EEE is like as a psychological mechanism or how it renders possible Cooper's (1979) influence sequence.

Be that as it may, an implication may be derived jointly from (a) a literal reading of EEE, and (b) the general spirit of Rosenthal & Fode's (1963a,b) argument. This implication has been depicted in the top panel of Figure 1. The main features of the implication are (1) there is a difference between smiling faces and sad faces for every group of experimenters, and (2) there is a difference among the three differences identified in (1). These two features are predicated on the stipulation that to conduct an experiment is to collect data in at least two sets of conditions which are identical in all aspects but one (Boring 1954).

It helps to make explicit the assumption underlying the data pattern depicted in the top panel of Figure 1. Assume, as in the EEE view, that an experimenter would unwittingly convey cues to the subjects. It seems reasonable to assume that, in the absence of any extra-experimental influence, an experimenter may provide cues for the subjects to behave in a way consistent with common sense, that is, (a) to give an empathic response of "success" to a smiling face, and (b) to give an empathic response of "failure" to a sad face. Hence, experimenters in the "0" group were assumed to induce their subjects to give average ratings of +5 and -5 to smiling and sad faces, respectively.

If the EEE view were correct, subjects in the "+5" group might be induced to give positive ratings to all photographs. However, owing to their common sense, the subjects might still rate smiling faces more positively than sad faces. By the same token, although subjects in the "-5" group might be induced to give negative ratings to all photographs as a result of EEE, they might rate sad faces more negatively than smiling faces. Hence, the necessary condition for accepting EEE is an interaction between (a) the "expectancy" information given and (b) the type of face.
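The two features of the predicted pattern can be stated compactly. The notation is introduced here for illustration and is not the author's: $\bar{R}_{g,s}$ denotes the mean rating obtained by expectancy group $g$ for face type $s$, and $D_g$ is that group's smiling-minus-sad difference.

$$
D_g = \bar{R}_{g,\text{smiling}} - \bar{R}_{g,\text{sad}}, \qquad g \in \{+5,\ 0,\ -5\}
$$

$$
\text{(1)}\;\; D_g > 0 \ \text{for every } g, \qquad \text{(2)}\;\; \neg\left(D_{+5} = D_{0} = D_{-5}\right), \qquad \text{with } D_0 \approx (+5) - (-5) = 10
$$

Feature (2) is what the Expectancy × Type of Face interaction tests: if $H_0\colon D_{+5} = D_0 = D_{-5}$ cannot be rejected, the necessary condition for EEE is not met.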

3. Method

3.1. Experimenters

Twenty students in a third-year psychology course at the University of Wollongong (New South Wales, Australia) served as experimenters in the meta-experiment (i.e., they administered the photo-rating task under two conditions which were comparable in all aspects but one). Their participation satisfied a course requirement. They were randomly divided into three groups: 7 in the "+5" group, 6 in the "0" group, and 7 in the "-5" group. The experimenters tested different numbers of subjects (see Table 3).

3.2. Subjects

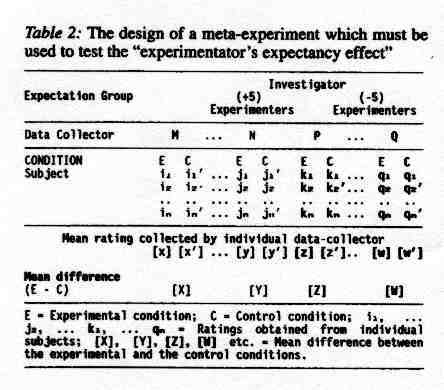

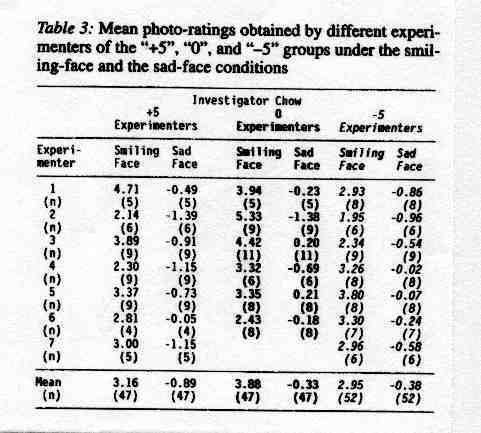

One hundred and forty-six first-year psychology students at the University of Wollongong (New South Wales, Australia) served as subjects in the meta-experiment (i.e., they rated the photographs) to satisfy a course requirement. They were randomly assigned to the twenty experimenters in groups of various sizes (see Table 3). All subjects had normal or corrected-to-normal vision.

3.3. Designs

There were two designs at two levels of abstraction because this study was a meta-experiment. First, as this study was an attempt to experimentally study experimentation, its design had to be an experiment to the investigator. At the same time, each of the third-year students responsible for collecting data should be an experimenter (i.e., they had to conduct an experiment). As may be seen from Table 3, the design was a between-groups 1-factor, 3-level design to the investigator. The independent variable was "Expectancy" given to the experimenters (viz., the to-be-replicated mean rating allegedly obtained by previous experimenters). The three levels of this independent variable were +5, 0, and -5; they were the to-be-replicated mean ratings intimated to the 3 groups of experimenters. Second, from the perspective of the twenty experimenters, it was a repeated-measures 1-factor, 2-level design. The independent variable was the type of facial expression whose two levels were smiling face and sad face.

3.4. Materials

Over two hundred photographs of faces were collected from a local newspaper in Wollongong (New South Wales, Australia). Forty-eight photographs were chosen such that there were 12 smiling male faces, 12 sad male faces, 12 smiling female faces, and 12 sad female faces. Forty-eight overhead-projector slides were prepared with these photographs. Booklets of response scales (from -10 to +10) were used to collect rating responses from the subjects (see Appendix A of Rosenthal & Fode 1963a, 506).

3.5. Procedure

The meta-experiment consisted of two phases. In Phase I, the three groups of experimenters were trained separately. All of them were first given the photo-rating task by the investigator as though they were subjects in an experiment. No mention was made at this stage of what the expected result might be. The purpose of this phase was to ensure that the prospective experimenters knew (a) that half of the faces were smiling and the other half sad, (b) that the two types of face were arranged in a random order, and (c) how to explain the task to their respective subjects in Phase II of the meta-experiment.

As in Rosenthal & Fode's (1963a) study, different information regarding the putative to-be-replicated mean ratings was intimated to the three groups of experimenters at the end of Phase I, when they were fully conversant with the photo-rating task. More specifically, the experimenters in the "+5", "0", and "-5" groups were informed that previous investigators had obtained a mean rating of +5, 0, and -5, respectively.

In Phase II, all experimenters were given a copy of the appropriate section of Rosenthal & Fode's (1963a) "Instructions to subjects" and were asked to read the instructions verbatim to their subjects. This kept the procedure of the meta-experiment as close to that of Rosenthal & Fode's (1963a) study as possible.

In Phase II of the meta-experiment, each experimenter administered the photo-rating task to his or her subjects as a group. The instructions for the photo-rating task were first read to the subjects. The experimenter then presented the 48 photographs with an overhead projector at a rate of approximately 5 seconds per slide. The smiling and sad faces were presented in a random order. Subjects were to rate whether the face was that of someone who had recently experienced a success or a failure. They indicated their responses on a 21-point scale, on which -10 indicated extreme failure and +10 meant extreme success.
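The stimulus set and presentation order described in Sections 3.4 and 3.5 can be sketched as follows. This is a hypothetical illustration: the slide identifiers, the use of Python's `random.Random.shuffle` as the randomization method, and the seed are assumptions, since the paper states only that the 48 faces (12 per expression-by-sex cell) were shown in a random order at roughly 5 seconds per slide.

```python
import random
from collections import Counter

SECONDS_PER_SLIDE = 5  # "approximately 5 seconds per slide"


def build_slide_set():
    """Return 48 hypothetical slide IDs: 12 per expression x sex cell."""
    return [
        (expression, sex, number)
        for expression in ("smiling", "sad")
        for sex in ("male", "female")
        for number in range(1, 13)
    ]


def presentation_order(slides, seed=None):
    """Return a shuffled copy of the slides (assumed randomization method)."""
    order = list(slides)
    random.Random(seed).shuffle(order)
    return order


if __name__ == "__main__":
    order = presentation_order(build_slide_set(), seed=1)
    # Half of the trials are smiling faces and half are sad faces,
    # so each experimenter's two conditions have 24 trials apiece.
    print(Counter(expression for expression, _, _ in order))
    print("approximate duration:", len(order) * SECONDS_PER_SLIDE, "seconds")
```

The paper does not say whether every experimenter used the same random order or a fresh one; passing the same `seed` to every experimenter would correspond to the former.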

4. Results

The mean photo-rating responses obtained by different experimenters of the "+5," "0," and "-5" groups under the "Smiling Face" and "Sad Face" conditions are shown in Table 3, and graphically represented in the bottom panel of Figure 1. As the purpose of this study was to investigate EEE experimentally, data analysis was conducted from the investigator's perspective. Consequently, the design shown in Table 3 represents a split-plot 3x2 design.

The first factor, "Expectancy", was a between-groups factor with 3 levels (viz., an expected mean rating of "+5", "0", and "-5"). "Type of Face" was the second factor; it was a repeated-measures factor with 2 levels (viz., "Smiling Face" and "Sad Face"). Neither the main effect of "Expectancy" nor the interaction between "Expectancy" and "Type of Face" in this split-plot 3x2 factorial ANOVA was significant. The main effect of "Type of Face" was significant: the mean ratings given to smiling and sad faces were 3.32 and -0.527, respectively, F[1,126] = 76.68, p < .05; MSerror = 3.14.

There was an alternative way of testing EEE (see Rosenthal 1976, 110). The difference between the smiling-face and sad-face conditions was first calculated for every subject in every "Expectancy" condition. The resultant three sets of differences were subjected to a between-groups 1-factor, 3-level ANOVA. The main effect of "Expectancy" was not significant. The mean differences between the smiling-face and sad-face conditions were 4.06, 4.21, and 3.33 for the "+5", "0", and "-5" groups, respectively.
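The alternative test can be sketched in a few lines of Python. This is a minimal, hypothetical illustration: the ratings are invented (not Table 3 data), and the ANOVA is written out by hand rather than taken from a statistics package. Each subject is reduced to a smiling-minus-sad difference score, and the three "Expectancy" groups of difference scores are compared with a between-groups one-way ANOVA. With only two levels of "Type of Face", this F should coincide with the F for the Expectancy × Type of Face interaction in the split-plot analysis.

```python
from statistics import mean


def difference_scores(subjects):
    """Smiling-minus-sad difference for each subject's (smiling, sad) mean ratings."""
    return [smiling - sad for smiling, sad in subjects]


def one_way_anova(groups):
    """Between-groups one-way ANOVA; returns (F, df_between, df_within)."""
    scores = [x for group in groups for x in group]
    grand_mean = mean(scores)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


if __name__ == "__main__":
    # Hypothetical (smiling, sad) mean ratings per subject; NOT the paper's data.
    plus5 = [(5.0, 1.0), (4.5, 0.0), (3.0, -1.5)]
    zero = [(4.0, -0.5), (3.5, -1.0), (5.0, 0.5)]
    minus5 = [(2.5, -1.0), (3.0, -0.5), (4.0, 0.0)]
    groups = [difference_scores(g) for g in (plus5, zero, minus5)]
    f_ratio, df1, df2 = one_way_anova(groups)
    print(f"F({df1}, {df2}) = {f_ratio:.2f}")
```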

5. Discussion

It has been shown that an interaction between "Expectancy" and "Type of Face" is the necessary condition for the tenability of EEE. However, such an interaction was not obtained in the present meta-experiment. Nor was there a significant main effect of "Expectancy". It has to be concluded that there is no empirical support for EEE.

However, the EEE claim might be defended in the following way. The neutral faces used by Rosenthal & Fode (1963a) were ambiguous stimuli in a way the smiling and sad faces used in the present meta-experiment were not. Perhaps EEE is found only with ambiguous stimuli. It may even be suggested that EEE is found only when the test condition is ambiguous. This defence of EEE is unsatisfactory for the following reasons. First, Rosenthal & Fode (1963a) conducted an experiment when a meta-experiment was required. Their student data-collectors did not conduct an experiment. Second, ambiguity (of stimuli or of the test condition) is not a component of EEE. Third, EEE is presented (and accepted by many) as an inherent weakness of the experimental approach. That is, it should be found in ALL experiments, including the one conducted by the 20 experimenters in the present study.

Furthermore, it is held in the EEE view that neither experimenters nor subjects are aware of the subtle cues involved. For example, the results obtained by Rosenthal & Fode (1963a) are cited in many textbooks to show that an experimenter would unwittingly behave in a way favorable to the to-be-tested hypothesis. It is this very feature that would render EEE so fatal to experimentation if EEE could be validly demonstrated. To accept such an interpretation is to accept the assumptions that (1) Rosenthal & Fode's (1963a) data-collectors were not under any extra-research pressure (e.g., the data-collectors considered the information intimated to them as a theoretical hypothesis, and not as a criterion of competence), and (2) the data-collectors were unaware of the influence of their own vested interest in a particular outcome. However, neither of these two assumptions is warranted.

Rosenthal & Fode's (1963a) data-collectors were told they would be paid more money (viz., $2 an hour instead of $1) if their "results come out properly - as expected" (Rosenthal & Fode 1963a, 507). They thus had a pecuniary interest in behaving in a particular way. Moreover, the information intimated to the data-collectors (who were undergraduate students) might have been interpreted by the students in a non-theoretical way. For example, the students might have thought that failure to obtain the "proper" result indicated incompetence on their part (i.e., the student data-collectors did not treat the information about "previous" findings as a theoretical hypothesis). That is, there was psychological pressure other than the putative EEE at work. In other words, at least two obvious sources of extra-research pressure were imposed on Rosenthal & Fode's (1963a) student data-collectors. Both of these possibilities imply a conscious decision on the part of the student data-collectors (i.e., the data-collectors could not help being aware of the decision).

To recapitulate, EEE is rejected here because (a) Rosenthal & Fode (1963a) did not conduct a meta-experiment, and (b) the data pattern in the bottom panel of Figure 1 does not match that in the top panel. Both of these objections have a heavy methodological overtone. Moreover, the present argument against EEE is made from the perspective of Popper's (1968) conjectures-and-refutations (more commonly known as hypothetico-deductive) view of science. Some metatheoretical issues may arise as a result of these characteristics.

First, it might be asked whether or not rejecting EEE for a methodological reason is excessive. This question does not provide a good defense of EEE because it effectively denies the distinction between experiment and measurement. An irony is that Rosenthal (1976) seemed to have this distinction in mind when he said, "But much, perhaps most, psychological research is not of this sort (i.e., the measurement exercise as schematically represented in Table 1). Most psychological research is likely to involve the assessment of the effects of two or more experimental conditions on the responses of the subject (i.e., an experiment). If a certain type of experimenter tends to obtain slower learning from his subjects, the 'results of this experiment' are affected not at all so long as his effect is constant over the different conditions of the experiment. Experimenter effects on means do not necessarily imply effects on mean differences." (Rosenthal 1976, 110; my explications in parentheses and italic; Rosenthal's own quotation marks.)

The very reason to have a control condition in an experiment is to make it possible to rule out, as explanations, the various unintended effects that can influence a measurement exercise carried out in the course of non-experimental research (Chow 1987a; 1992).
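The point of the Rosenthal (1976) quotation, that an experimenter effect on means need not affect mean differences, can be illustrated with a toy calculation. Everything here is hypothetical: the ratings are invented, and the experimenter's influence is modeled, purely for illustration, as a constant added to each rating.

```python
from statistics import mean


def condition_difference(experimental, control):
    """Mean of the experimental condition minus mean of the control condition."""
    return mean(experimental) - mean(control)


def shifted(scores, bias):
    """Add a hypothetical constant 'experimenter bias' to every score."""
    return [x + bias for x in scores]


# Hypothetical ratings, NOT data from the paper.
experimental = [4, 5, 3, 4]
control = [-1, 0, -2, -1]

baseline = condition_difference(experimental, control)  # 4 - (-1) = 5
# A bias that is the same in both conditions cancels in the difference...
constant_bias = condition_difference(shifted(experimental, 3), shifted(control, 3))  # 5
# ...whereas a bias that differs between conditions does not.
differential_bias = condition_difference(shifted(experimental, 3), shifted(control, 0))  # 8
```

Under this additive model, only an influence that differs between the two conditions can change the experimental result; an influence that is constant over conditions affects the means but not their difference.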

The second metatheoretical issue is whether or not it is proper to adopt Popper's (1968) approach when EEE is being assessed. This question may arise because of the prescriptive or "normative" overtone of Popper's (1968) hypothetico-deductive approach. For example, Barnes (1982) found Popper's (1968) normative view of science objectionable. To discuss this issue fully would mean a lengthy digression; suffice it to say that the rationale of Rosenthal & Fode's (1963a,b) studies is not inconsistent with the hypothetico-deductive schema. At the same time, whether or not Popper's view of science is correct is not the bone of contention between Rosenthal & Fode's (1963a) study and the present one. The important point is that their student data-collectors were not experimenters.

A third metatheoretical issue may arise because some researchers argue that empirical data cannot be used to substantiate or refute a theory because all observations are theory-dependent. These investigators then conclude that all arguments based on empirical data are necessarily circular (Gergen 1991). It suffices to say here that, contrary to this constructionist contention, empirical data can be used in a non-circular way to corroborate or refute a theory if a distinction is made between the to-be-explained observations and the data used to substantiate an explanatory theory (see Chow's [in press] distinction between prior data and evidential data).

The fourth metatheoretical issue concerns the sophistication of the EEE view. It may be argued that data from this meta-experiment pose difficulties only for a naive version of EEE, and that there are more sophisticated versions of EEE. For example, there are various suggestions as to how EEE might be functionally related to various factors (e.g., anxiety level of both "experimenters" and "subjects", need for approval of "experimenters" or "subjects", characteristics of the laboratory, etc.).

It is not the intention of this critique to preclude a satisfactory version of EEE. The objective of this paper is to point out an important issue, namely, that any version of EEE must be substantiated with data from a meta-experiment. Moreover, one problem with the current formulations of EEE may be pointed out. Knowing that Variables A and B are functionally related is still not informative as to WHY, or BY VIRTUE OF WHAT MECHANISMS, Variables A and B are so related. To be useful as an explanatory concept, the theoretical properties of EEE must be well-defined. That current formulations of EEE are unsatisfactory may be seen from a paradox.

Suppose that data of this meta-experiment had been consistent with the pattern shown in the top panel of Figure 1. The EEE argument would then have been supported. As it turns out, data of this meta-experiment (see the bottom panel of Figure 1) are inconsistent with the pattern in the top panel of Figure 1. However, I am critical of the EEE argument at the conceptual, as well as the methodological, level to begin with. May it not be the case that EEE induced me (without my being aware of it) to influence my experimenters to affect their respective subjects in a way inconsistent with EEE? In other words, regardless of the outcome of ANY meta-experiment, EEE would be supported. Seen in this light, data from this meta-experiment support EEE after all. This paradox has been used to defend the term "expectancy effects" (see Babad 1978; Chubin 1978; Jussim 1986). The paradox arises because the EEE formulation is vague. We know nothing about the properties of the putative psychological mechanism. Consequently, no criteria of rejection can be logically deduced from EEE. The absence of an independent index of EEE renders it untestable.

The fifth metatheoretical issue is how damaging the present critique is to EEE when only one study among many is being discussed. To begin with, the distinction between experimental and non-experimental studies is never made in current discussions of EEE. Rosenthal's (1976) review of experimenter effects should be read with reservations in view of the important differences between the schemata represented in Tables 1 and 2. The present concentration on Rosenthal & Fode's (1963a) study may be justified in terms of the type-token distinction. Discrete items are tokens of a type when (a) they share something in common and (b) this shared property is a defining characteristic of the type. If the defining property of the type is found wanting, all tokens of that type are found wanting as well. Rosenthal & Fode's (1963a) study is a good illustration of why an experiment is NOT a meta-experiment. Any study of EEE that uses the design of Rosenthal & Fode (1963a,b) is invalid for the same reason. For example, the studies by Intons-Peterson (1983) and Intons-Peterson & White (1981) can be similarly faulted in their capacity as demonstrations of EEE.

Pedagogical Implications of EEE. The acceptance of EEE in textbooks is very ironic because it undermines what students are supposed to learn about research methodology, particularly (1) the meaning and role of the crucial term, control, in the context of experimentation, and (2) the important differences between experimental and non-experimental research (viz., the presence and absence, respectively, of control). The irony arises because, at the logical level, an experiment with appropriate controls is the only means of ruling out a putative researcher effect (see the aforementioned quote from Rosenthal 1976, 110): experimental controls serve to exclude alternative explanations of experimental results, including the sort of procedural artifacts characterized as EEE.

Apart from this irony, the acceptance of EEE in textbooks is also objectionable at the pedagogical level. Students are exhorted to be conceptually rigorous. Yet, as has been shown, the empirical basis of EEE is not valid. Students are advised to use precise terminology unambiguously, and they are introduced to the differences between the experimental and the non-experimental approaches to research. However, when EEE is being discussed, the terms experiment and experimenter are used as though they were synonymous with "research" and "researcher", respectively. Moreover, the term experiment is used indiscriminately to refer to any kind of empirical research, experimental or otherwise. For example, it has been suggested that the personal characteristics of an "experimenter" may influence the "experimental" outcome. The evidence cited in support of this assertion is the observation that the outcome of an interview depended on the race of the interviewer and the interviewees (Rosenthal 1976). This is unacceptable because conducting an interview is not the same as conducting an experiment. An undesirable pedagogical consequence of this state of affairs is that we do not follow the advice we give our students.

Note:

This research was partly supported by an Australian Research Council grant and partly by a grant from the Natural Sciences and Engineering Research Council of Canada (No. OGPOO42159). I thank David Walters for briefing the experimenters. I am also grateful to the anonymous reviewers for their comments.

References

Babad, E.Y. (1978). On the biases of psychologists. Behavioral and Brain Sciences, 3, 387-388.

Barber, T.X. & Silver, M.J. (1968a). Fact, fiction, and the experimenter bias effect. Psychological Bulletin Monograph Supplement, 70 (6), Part 2, 1-29.

Barber, T.X. & Silver, M.J. (1968b). Pitfalls in data analysis and interpretation: A reply to Rosenthal. Psychological Bulletin Monograph Supplement, 70 (6), Part 2, 48-62.

Barnes, B. (1982). T.S. Kuhn and social science. New York: Columbia University Press.

Boring, E.G. (1954). The nature and history of experimental control. American Journal of Psychology, 67, 573-589.

Brophy, J.E. (1983). Research on the self-fulfilling prophecy and teacher expectations. Journal of Educational Psychology, 75, 631-661.

Chow, S.L. (1987a). Meta-analysis of pragmatic and theoretical research. Journal of Psychology, 12, 95-100.

Chow, S.L. (1987b). Some reflections on Harris and Rosenthal's 31 meta-analyses. Journal of Psychology, 12, 95-100.

Chow, S.L. (1987c). Experimental psychology: Rationale, procedures and issues. Calgary: Detselig.

Chow, S.L. (1989). Significance tests and deduction: Reply to Folger. Psychological Bulletin, 106, 161-165.

Chow, S.L. (1990). Teacher's expectancy and its effects: A tutorial review. Zeitschrift für Pädagogische Psychologie, 4, 147-159.

Chow, S.L. (1992). Research methods in psychology: A primer. Calgary: Detselig.

Chow, S.L. (in press). Acceptance of a theory: Justification or rhetoric. Journal for the Theory of Social Behaviour.

Chubin, D.E. (1978). Inattention to expectancy: resistance to a knowledge claim. Behavioral and Brain Sciences, 3, 390-391.

Cohen, M.R. & Nagel, E. (1934). An introduction to logic and scientific method. London: Routledge & Kegan Paul.

Cooper, H.M. (1979). Pygmalion grows up: A model for teacher expectation communication and performance influence. Review of Educational Research, 49, 389-410.

Dusek, J.B., Hall, V.C. & Meyer, W.J. (Eds.) (1985). Teacher expectancies. Hillsdale, New Jersey: Lawrence Erlbaum.

Gergen, K.J. (1991). Emerging challenges for theory and psychology. Theory & Psychology, 1, 13-35.

Harris, M.J. & Rosenthal, R. (1985). Mediation of interpersonal expectancy effects: 31 meta-analyses. Psychological Bulletin, 97, 363-386.

Intons-Peterson, M.J. (1983). Imagery paradigms: How vulnerable are they to experimenters' expectations? Journal of Experimental Psychology: Human Perception and Performance, 9, 394-412.

Intons-Peterson, M.J. & White, A.R. (1981). Experimenter naiveté and imaginal judgements. Journal of Experimental Psychology: Human Perception and Performance, 7, 833-843.

Jung, J. (1978). Self-negating functions of self-fulfilling prophecies. Behavioral and Brain Sciences, 3, 397-398.

Jussim, L. (1986). Self-fulfilling prophecies: A theoretical and integrative review. Psychological Review, 93, 429-445.

Meehl, P.E. (1978). Theoretical risks and tabular asterisks: Sir Karl, Sir Ronald, and the slow progress of soft psychology. Journal of Consulting and Clinical Psychology, 46, 806-834.

Meehl, P.E. (1990). Appraising and amending theories: The strategy of Lakatosian defense and two principles that warrant it. Psychological Inquiry, 1, 108-141.

Mill, J.S. (1973). A system of logic: Ratiocinative and inductive. Toronto: University of Toronto Press.

Popper, K.R. (1968). Conjectures and refutations: The growth of scientific knowledge. New York: Harper & Row.

Rosenthal, R. (1969). Interpersonal expectations: Effects of the experimenter's hypothesis. In Rosenthal, R. & Rosnow, R.L. (Eds.). Artifact in behavioral research. New York: Academic Press, 181-277.

Rosenthal, R. (1973). The Pygmalion effect lives. Psychology Today (September issue), 56-62.

Rosenthal, R. (1976). Experimenter effects in behavioral research. New York: Appleton-Century-Crofts.

Rosenthal, R. & Fode, K.L. (1963a). Psychology of the scientist: V. Three experiments in experimenter bias. Psychological Reports, 12, 491-511.

Rosenthal, R. & Fode, K.L. (1963b). The effect of experimenter bias on the performance of the albino rat. Behavioral Science, 8, 183-189.

Rosenthal, R. & Rosnow, R.L. (1969). The volunteer subject. In Rosenthal, R. & Rosnow, R.L. (Eds.). Artifact in behavioral research. New York: Academic Press, 59-118.

Rosenthal, R. & Rosnow, R.L. (1975). The volunteer subject. New York: John Wiley.

Rosenthal, R. & Rubin, D.B. (1971). Pygmalion reaffirmed. In Elashoff, J.D. & Snow, R.E. (Eds.). Pygmalion reconsidered. Worthington, Ohio: Charles A. Jones Publishing Co.

Rosenthal, R. & Rubin, D.B. (1978). Interpersonal expectancy effects: The first 345 studies. Behavioral and Brain Sciences, 3, 377-415.

Stevens, S.S. (1968). Measurement, statistics, and the schemapiric view. Science, 161, 849-856.