It becomes a hypothesis that this notion of symbols includes the symbols that we humans use every day in our lives.

Situated cognition research rejects the hypothesis that neurological structures and processes are similar in kind to the symbols we create and use in our everyday lives. The symbolic approach, as described by Vera and Simon (V&S, in preparation), conflates neurological structures and processes with physical representations that we perceive and manipulate in our environment (e.g., a journal article) and experiences of representing in our imagination (e.g., visualizing or talking to ourselves). This category error distorts the nature of perception, the nature of conceptual interpretation as we comprehend written plans, and in general the adaptive nature of every thought and action. At its heart, the symbolic approach confuses an agent's deliberated action--in sequences of behavior over time, as cycles of reperceiving and commenting (e.g., in speech, writing, drawing)--with what occurs within every ongoing coordination (Dewey, 1896).

Put another way, the symbolic approach conflates "first person" representations in our environment (e.g., utterances and drawings) with "third person" representations (e.g., mappings a neurobiologist finds between sensory surfaces and neural structures) (Slezak, in preparation). Saying that "the information in DNA and RNA is certainly represented symbolically" (p. 401) obscures what happens when an agent represents something or interprets something symbolically. DNA sequences aren't symbols in the 1st person sense because they are not interpreted by the agent possessing them as meaning something. But a scientist looking inside can view DNA sequences as symbols in the 3rd person sense, by showing that they constitute a code that "contains information" about the agent's phenotype [2]. By ignoring the shift in frame of reference between the agent and the scientist looking inside, V&S ignore the role of perceiving and coordinating action in creating and using representations. Indeed, saying "the way in which symbols are represented in the brain is not known" (p. 3) oddly mixes the two points of view (whose symbols are represented?).

In effect, the symbolic approach obscures the nature of representations. A corollary is that the symbolic approach inadequately characterizes the nature, origin, and value of symbolic models like SOAR (Clancey, 1991b). Reformulating the relation of symbolic cognitive models to human behavior repudiates neither their value for understanding human behavior nor the value of symbolic modeling techniques (Clancey, 1992a). We must separate the content of cognitive models and metatheoretic claims made about them from the modeling methods. We could, for example, use classification hierarchies or state transition networks to model neural interactions, without claiming that these models are also stored in the brain. More to the point, the claims of situated cognition lead us to reformulate knowledge acquisition, the process of constructing an expert system (Clancey, 1989). Rather than viewing it as transfer of expertise--that is, extracting "knowledge" already pre-represented as stored structures in the expert's memory (e.g., stored rules or semantic nets)--we view it as a process of creating representations, inventing languages, and in general formulating models for the first time. In effect, reinterpreting the meaning of cognitive models relative to human memory leads us to re-examine the scientific process of model construction (Gregory, 1988), the relation of models and everyday activity (Lave, 1988), and the use of models in instruction (Schön, 1987).

V&S state several times that "complex human behavior can be and has been described and simulated effectively in physical symbol systems." (p. 42) Citing an example of a human driving a car, they say, "The human part of this sequence of events...can be modeled by a symbolic pattern recognition cum production system." (p. 13) But situated cognition does not argue that "humans and their interactions with the world cannot be understood using symbol-system models and methodology" (p. 1). The issue is not understanding or modeling, per se. The claim is that the model is merely an abstraction, a description and generator of behavior patterns over time, not a mechanism equivalent to human capability.

Symbolic models have explanatory value as psychological descriptions. In stable environments a symbolic model can serve to control a useful robot agent, like NAVLAB. Regardless of what we later understand about the brain or cognition, such models are unlikely to go away. For example, descriptions of patterns of behavior, in terms of goals, beliefs, and strategies, are especially valuable for instruction (e.g., Clancey, 1988). But arguing that all behavior can be represented as symbolic models (p. 43) misses the point: We can model anything symbolically. But what is the residue? What can people do that today's computer programs cannot? What remains to be replicated?

Our goal of understanding the brain and replicating human intelligence with computers will no longer be served well by using the term "symbol" (or "representation") to refer interchangeably to experiences of representing something to oneself, to neural structures/processes, and to forms in the world such as words in a computer program. Saying that "Brooks' creatures are very good examples of orthodox symbol systems" makes "symbol system" of little heuristic value for robot design. This meaning of "symbol system" lumps together vending machines, conventional expert systems, situated automata (Maes, 1990), SOAR, cats, and people. What then does "symbol system" explain? What value does it provide for better understanding the human brain? Perhaps V&S would say it moves us out of the behaviorist camp, but then they are still fighting the last battle. The distinction is too coarse for improving mechanisms today. NAVLAB's neural network is clearly not the kind of computational mechanism described in Human Problem Solving (Newell and Simon, 1972). Agre (1988), Brooks (1991), Rosenschein (1985) and the connectionists are moving us away from a flat "symbolic" architecture--leading us as designers to distinguish between our interpretations of structures inside the robot and the robot's interpretations of structures it perceptually creates and manipulates (Clancey, 1991b).

Some of the interesting questions for advancing cognitive science today concern how children invent representations (Bamberger, 1991), how immediate behavior is adaptive (Laird and Rosenbloom, 1990), the role of metaphor in theory formation (Schön, 1979), the social organization of learning (Roschelle and Clancey, 1992), the relation of affect and belief (Iran-Nejad, 1984), and so on. These psychological issues are raised by the progenitors of situated action, in work ranging from Dewey, Collingwood, and Bartlett to Piaget, Vygotsky, and Bateson. But we needn't look so far afield: Recent work in neuroscience, language, and learning discusses the relation between situated cognition and symbolic theories (e.g., Lakoff, 1987; Sacks, 1987; Rosenfield, 1988; Edelman, 1992; Bickhard and Richie, 1983), providing a useful starting point for understanding social critiques (Lave, 1988; Suchman, 1987).

I agree with Vera and Simon that SA research needs to be reformulated in terms useful to psychologists and cognitive scientists. There is no single answer for what "situated" means to researchers in diverse fields such as robotics, psychology, education, or organizational theory. Each community will make its own interpretation. Necessarily, my assertions defining situated cognition in terms of neuropsychology may be uninteresting or incomprehensible to Lave or Suchman [3]. My only goal is to do justice to their insights in a way useful to cognitive science and AI.

Five central claims of SA are summarized here. In subsequent sections of the paper, I show how these claims can be grounded in neuropsychology, that is, by relating cognitive theories of problem solving, knowledge, memory, etc. to neurological structures and processes.

1) The representation storehouse view of memory confuses structures in the brain with physical forms that are created and used in speaking, drawing, writing, etc.

Assuming that the researcher's conjectured representational language and models pre-exist in the subject's brain has led cognitive science to de-emphasize how people create representations every day, reducing learning to syntactic modification of the modeler/teacher's presupplied ontology of standard notations (Iran-Nejad, 1984; Rosenfield, 1988). Representing, comprehending, meaning, speaking, conceiving, etc. are reified into acts of manipulating representations in a hidden way called "reasoning" (Ryle, 1949). Stored-schema models tend to view meaning as mapping between given information and stored conceptual primitives, facts, and rules; activity as executing rules or scripts; problems as given descriptions in an existing, shared notation (representation language); information as given, selected, or filtered from the environment; and concepts as stored descriptions, such as dictionary definitions (Reeke and Edelman, 1988; Lakoff, 1987).

2) Schema models wrongly view learning as a secondary phenomenon, necessarily involving representation (reflection).

No human behavior is strictly rote (Bartlett, 1932). Learning occurs with every act of seeing and speaking. Categories are not stored things, but always adapted ways of talking, seeing, relating, or in general, ways of coordinating behavior. A person interpreting a recipe, diagram, or journal article is conceiving, not merely retrieving and assembling primitive meanings and definitions according to rules of grammar and discourse structure (Collingwood, 1938; Tyler, 1978; Suchman, 1987). Information is created by the observer, not given, because comprehending is conceiving, not retrieving and matching (Reeke and Edelman, 1988; Gregory, 1988).

3) Integration of perceiving and moving and higher order serial organizations is dialectic--coherent subprocesses arise together--not via linear causality or parallelism.

Perceiving, thinking, and moving always occur together, as coherent coordinations of activity (Dewey, 1896). Representing can occur within the brain (e.g., visualizing), but always involves sensorimotor aspects and is interactive, even though it may be private (i.e., representing without use of representational things in the environment). Representing is always an act of perceiving; we can't strictly separate perceiving and moving (e.g., when speaking I am also comprehending what I am saying)--coordinations are circuits.

4) Practice cannot be reduced to theory.

The source of cultural commonalities is not a set of common laws, grammars, and behavior schemas stored in individual brains (Lave, 1988). There is no locus of control in human activity, either neurobiological or social. Our ability to coordinate our activities without mediating theories is the foundation for our ability to agree on courses of action and theorize in similar ways (rather than the other way around) (Lave and Wenger, 1991; Lakoff, 1987). Social processes involve organization of a larger system, transcending individual awareness and control, not merely transferring representations (as in speech). Models of social interactions are not required for their occurrence and reproduction (cf. Bartlett's (1932) discussion of soccer, p. 277).

The difficulty with the identification of rule and intuition is that it implies that the knowledge in our heads is more explicit than the practices that might reveal it. If rules are discursive directions for action then they cannot be 'nuances' or feelings of 'subtle relations.' (Tyler, 1978, p. 153).

Indeed, as we perceive patterns and articulate theories to explain them, we become increasingly alienated from the complexity of activity itself (Tyler, 1978). That is, as we perceptually abstract nature and human behavior in pattern descriptions (e.g., discourse patterns), and explain the occurrence of such regularities in theoretical laws (e.g., "felicity conditions" explaining why discourse patterns exist), we become more distant from the phenomena we are studying. The apparent stability and completeness of our theories is always relative to our artifacts, ongoing purposes, and activities.

5) Situated cognition relates to ideas in the philosophy of science, concerning the nature of mechanisms and pattern descriptions.

Several ideas about theories are intricately related in stored-schema models of cognition:

(2) Scientific theories are the epitome of knowledge (e.g., the emphasis on "deep models" in AI research);

(3) Rational behavior obeys general laws of logic such as modus ponens;

(4) Human knowledge consists of facts and laws stored in memory, causing observed regularities in behavior.

Many people have attacked these ideas, suggesting in addition that they are distorting human activities as diverse as architectural design (Alexander, 1979), organizational learning (Nonaka, 1991), professional training (Dreyfus and Dreyfus, 1986; Schön, 1987), musical invention (Bamberger, 1991), design of complex devices (Suchman, 1987), use of computers in business (Winograd and Flores, 1986), and scientific progress itself (Gleick, 1987).

To understand the SA argument, we should consider up front the common but misleading interpretations of what "situated" means. Some of these interpretations arise because social scientists are trying to make contact with cognitive theories, but lack the background to speak in the language a cognitive scientist expects. Situated cognition research is:

2. Not rejecting the value of planning and representations in everyday life, rather seeking to explain how they are created and used in already coordinated activity.

3. Not claiming that representing does not occur internally, in the individual brain (e.g., imagining a scene or speaking silently to ourselves), rather seeking to explain how perceiving and comprehending are co-organized.

4. Not in itself a prescription for learning ("situated learning"), rather claiming that learning is occurring with every human interaction.

5. Not disputing the descriptive value of schema models for cognitive psychology, rather attempting to explain how such regularities develop in behavior, and what flexibility for improvisation and basis in previous, pre-linguistic sensorimotor grounding are not captured by symbolic models.

6. Not claiming that current computer programs can't construct a problem space or action alternatives, rather revealing how programs can't step outside pre-stored ontologies.

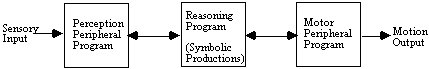

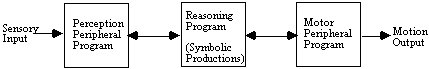

To understand the implications of SA for psychology, we must first take away the memory of stored 1st person representation structures. Second, we must replace the CPU view of processing, involving peripheral perception and motor systems, by a dialectic mechanism that simultaneously coordinates perception-action. Third, we must move deliberation out to the behavior of the agent, involving cycles of perception and action. Fourth, we must view learning not as a process of storing new programs, but as part of adaptive re-coordination that occurs with every behavior, on top of which reflective representation (framing, story-telling, and theorizing) is based (Schön, 1990).

In subsequent sections, I explain the dialectic view of neural architecture and its implications for theories of representation and learning. In section 5 I summarize the separate lines of argument from the external (social) and internal (cognitive) perspectives, using a table to summarize the sometimes dramatic shifts in our understanding of meaning, concepts, and memory. I conclude by summarizing the broader implications, which are already well underway in studies of organizational learning, business process modeling, and design of complex computer systems.

2. To be clear, such a mapping (e.g., "a sequence of amino acids, CAGCTA corresponds to such and such") is a 1st person representation to the scientist; that is, it is an expression the scientist creates and perceives as having meaning.

3. Even today’s "cognitive neuropsychologists" make little contact with symbolic theories. For simplicity, I will refer interchangeably to "situated cognition research" and "situated action theory" (abbreviated "SA"), not as a historical description of a uniform community or set of shared beliefs and theories, but as a useful synthesis.

The symbolic approach distinguishes between data and program, software and hardware, serial and parallel processing. A basic assumption is that perception and reasoning are possible without acting. Reasoning is contrasted with immediate, non-deliberated behavior. Even the parallel-distributed view describes data as processed independently in different modules, with results passed to other modules downstream (Farah, in preparation, pp. 31, 34). In attempting to put the pieces of perception, cognition, and motor operations back together, it is unclear how to make reflexes sensitive to cognitive goals (Laird and Rosenbloom, 1990; Lewis et al., 1990). This dichotomy arises because deliberation is opposed to, and in the architecture disjoint from, immediate perception-action coordination. Rather than viewing immediate perception-action coordination as the basis of cognition, cognition is supposed to supplant and improve upon "merely reactive" behavior.

According to the neuropsychological interpretation of situated action, the neural structures and processes that coordinate perception and action are created during activity, not retrieved and rotely applied, merely reconstructed, or calculated via stored rules and pattern descriptions (Bartlett, 1932; Freeman, 1991; Edelman, 1992). That is, the physical components of the brain, at the level of neuronal groups of hundreds and thousands of neurons, are always new--not predetermined and causally interacting in the sense of most machines we know--but coming into being during the activity itself, through a process of reactivation, competitive selection, and composition (Edelman, 1987; Smoliar, 1989). In particular, this architecture coordinates perception and action without intermediate decoding and encoding into descriptions of the world or of how behavior will appear to an observer, thus avoiding combinatoric search and matching (as well as problems of symbol grounding and the frame axiom problem) (Bickhard and Richie, 1983). With such a self-organizing mechanism--non-serial, non-parallel, but dialectic--coordination and learning are possible without deliberation (Dewey, 1896).

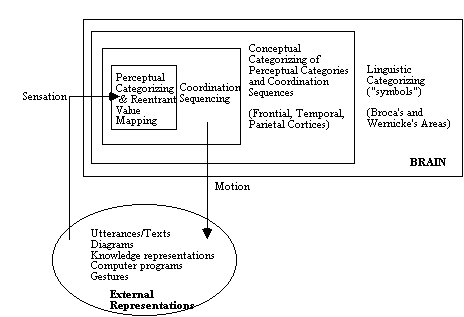

As Figure 2 illustrates, SA has indeed led Edelman "down novel paths which cannot be followed along traditional information processing lines" (p. 19). In contrast with the classical, symbolic architecture, the processors co-configure each other. All action is embodied because perception and action arise together automatically: Learning is inherently "situated" because every new activation is part of an ongoing perception-action coordination. Situated activity is not a kind of action, but the nature of animal interaction at all times, in contrast with most machines we know. This is not merely a claim that context is important, but that what constitutes the context, how you categorize the world, arises together with the processes that are coordinating physical activity. To be perceiving the world is to be acting in it--not in a linear input-output relation (act–observe–change)--but dialectically, so that what I am perceiving and how I am moving co-determine each other:

Categorization does not occur according to a computerlike program in a sensory area which then executes a program to give a particular motor output. Instead, sensorimotor activity over the whole mapping selects neuronal groups that give the appropriate output or behavior, resulting in categorization...always occurs in reference to internal criteria of value...(Edelman, 1992, p. 89)

We can view the cortex and neural structures in general as the hardware, but there are no fixed structural components by which symbols are stored, transferred, or manipulated as structures, in the sense of data structures in a computer memory. Rather, the software is the hardware being activated and configured "in line" as part of the living neural connection between sensory and motor systems. A schema is the hardware being chronologically and compositionally activated, by a process that is always adapted to other ongoing coordinations and is always a generalization of previous coordinations (Bartlett, 1932; Vygotsky, 1934). (In contrast, by the symbolic view schemas are 1st person representations of how the world or behavior appears, e.g., beliefs, scripts, strategies.)

Every activation reinforces physical connections, biasing active hardware to be reincorporated in future compositions, bearing the same temporal relations to perceptual and conceptual maps. Regularities of behavior, including an observer's construction of analogies, develop because every perceptual-motor coordination--in both agent and observer--generalizes, includes, and correlates previous perceptions and coordinations. The nesting of boxes in Figure 2 illustrates how "reentrancy" or the feedforward aspect of past organizations (categories and sequential coordinations of multimodal sensory and motor systems) biases the present, ongoing activation.

Other interesting aspects of this architecture, yet to be explained in any detail, include: Impasses in sequential processes feed back to produce awareness of failures; multimodal coordinations may occur simultaneously; acts of imagination bias future coordination; speaking occurs dialectically with self-comprehension, etc. Edelman also emphasizes the correlation of internal homeostatic systems (via brain stem, hypothalamus, etc.) in the hippocampus, amygdala, and septum prior to incorporation in global mapping coordination sequencing and conceptualization. Thus categorizations of "value" are integrated with the lowest levels of perceptual categorizing, making emotion integral, not a secondary coloration or distortion.

Everything stated here is posed as a highly simplified set of interleaving hypotheses about neural processes and psychological effects pertaining to memory, learning, and language. Some PDP models in cognitive neuropsychology provide a step in this direction (Farah, in preparation); but most assume that linguistic symbols are input at the lowest level, omitting the foundation of pre-linguistic categorization and perception-action coordination (Rosenfield, 1988). Brooks' systems (p. 28) are at a certain level mechanisms that coordinate behavior without intervening production and interpretation of 1st person symbolic descriptions. The subsumption architecture bears some relation to Figure 2, with each layer constraining the others, and compositional ordering, so higher-order networks are only active when the lower sensorimotor organizations they bias become active. Since the repertoire of sensorimotor coordinations is fixed and indeed pre-wired, Brooks' robots model the self-organization of the human brain, but in lacking the ability to recreate previous sequential coordinations, they do not replicate human learning or the plasticity of sensorimotor recombination.

The idea of "incrementally adding new control layers to simple systems that already work" (p. 28) adopts an evolutionary view of engineering design, espoused by both Braitenberg (1984) and Edelman (1992). The idea is to understand what non-symbolic mechanisms could support higher-order capabilities. This avoids the category error of building higher-order capabilities into the foundation from which they must be generated (Bickhard, in preparation). The navigation, nest-building, hunting, and social relations of other animals strongly suggest that linguistic, 1st person symbolizing is unnecessary for learning such behaviors. In contrast, the symbolically-mediated view states:

This stored computer program terminology of storage and transmission suggests that all perceiving and acting is mediated by language, in the sense that 1st person symbolic representations of the world are necessary to coordinate sensory and motor systems. The dialectic view is that symbolizing processes subsume and always involve pre-linguistic processes (Figure 2). Sensorimotor recoordination occurs automatically; indeed, for the animal running through a forest, navigation occurs as a matter of course without symbolizing (i.e., without language, whether verbal or pictorial). Note that conceptualizing ("categorizing parts of past global mappings according to modality, the presence or absence of movement, and the presence or absence of relationships between perceptual categorizations...a mapping of types of maps" (Edelman, 1992, p. 109)) is still pre-linguistic; that is, it is not constituted by syntactically ordered, conventional symbols like words or drawings (Lakoff, 1987). Rather than assuming that symbolic reasoning entirely supplanted pre-human emotional, reactive behavior, we ask instead how pre-linguistic mechanisms are further organized by additional neural processes--which both depend on and bias perceptual categorization, coordination, and conceptualization.

This view of neuropsychology is inspired by and seeks to explain a variety of well-known cognitive phenomena that symbolic theories inadequately explain or ignore:

--Regularities develop in human behavior without requiring awareness of the patterns, that is, without 1st person representations, such as grammars or strategy rules (Ryle, 1949);

--People speak idiomatically, in ways grammars indicate would be nonsensical (Collingwood, 1938);

--Both speech and writing require cycles of revision and rephrasing, rather than just outwardly expressing what has been prestated subconsciously (Tyler, 1978);

--We experience interest, a sense of similarity, and value before we create representations to rationalize what we see and feel (Dewey, 1896; Schön, 1979);

--Emotions provide an encompassing orientation for focusing interest and resolving an impasse (Bartlett, 1932);

--Figure/ground perceptual reorganization and action reorientation are rapid and apparently co-constructed (Freeman, 1991; Arbib, 1981);

--Linguistic concepts are grounded in perceptual resemblance and function in our activity, constrained by, but not restrictively defined by other concepts (Wittgenstein, 1958; Lakoff, 1987);

--Know-how is at first inarticulate and disrupted by reflection (Zuboff, 1988);

--Development involves stage effects, levels of representationality (Bickhard, in preparation);

--Remembering is aided by re-experiencing images and physical orientation (Bartlett, 1932);

--Every thought is a generalization (Vygotsky, 1934);

--Dysfunctions often involve inability to coordinate, not loss of modular, separable capabilities or knowledge (Sacks, 1987; Rosenfield, 1988);

--Immediate behavior is adapted, not merely selected from prepared possibilities (Bartlett, 1932);

--Modular specialization of the brain correlates with the dialectic relation between perceptual categorization and sequencing of behaviors (Freeman, 1991; Edelman, 1992).

From this perspective, situated cognition reintegrates psychological theories of physical and cognitive skills, uniting emotions, reasoning, and development, in a neurobiologically-grounded way.

Articulating (symbolizing) one's behavior and beliefs provides a way to reorient what would otherwise be automatic responses and automatic ways of dealing with impasses. By putting things out into our environment (or imagination), we can break the loop of merely reflexive action. Inquiry is interactive, a coordinated process that goes on in our behavior over time, as we reperceive, reshape, and reinterpret the material forms by which we model the world and our actions (Bamberger and Schön, 1983). The combination of new juxtapositions in physical space, with the possibility of new perceptual categorization, allows us to interactively, deliberately, form new symbolizations, and hence new conceptualizations, new ways of talking, new ways of seeing the world, new ways of coordinating our behavior. Indeed, Bartlett says that this is the function of consciousness:

V&S say "the difference is not consequential [that in GPS]...the 'motor action' modified an internal problem representation rather than an external one" (p. 15). We can view manipulating a problem representation inside a computer as a motor action. But it is important to distinguish internal structure modifications within a perception-action coordination from reshaping and reperceiving a representation in a sequence of activity.

SA does not dispute that "a human needs symbolic reasoning to guide reactive processes" (p. 23). Quite the contrary: SA elevates the creation and use of representations to the primary object of study (e.g., see Suchman's study of people interpreting photocopier instructions). Reactive processes are strictly unguidable in themselves, but over a series of reflective reperceiving acts (themselves reactive), new organizations are composed. SA thus involves two kinds of learning: automatic recoordination (primary) and reflection involving representing (secondary).

Arguments about PDP vs. symbolic internal processors miss this crucial distinction. "A gradual shift from SA to more planful action" (p. 27) is the wrong dichotomy. We are always situated because that is how our brains work. We are situated in an empty dark room, we are situated in bed when dreaming. People doing the Tower of Hanoi problem are always situated agents, regardless of how they solve the problem. SA is a characterization of the mechanism, of our embodiment, not a problem-solving strategy (cf. [subjects' use of a generative rule] "can hardly be regarded as SA" (p. 27)).

The NAVLAB network's "source of symbolic knowledge to plan and execute a mission" must not be equated with either neural structures or a person interpreting plans (again, our three distinctions). First person interpretation of a representation (e.g., reading a map or plan) is inventive, not a process of retrieving definitions or rotely reciting meaning. Human interpretation (and hence use of symbols, plans, productions, etc.) is not ontologically bound (Winograd and Flores, 1986); comprehending isn't just manipulating symbolic categories. Every thought is a generalization, involving primary learning grounded in reconfiguration of pre-linguistic categorizations and coordinations [4].

Following Dewey, SA views representations as tools to solve problems. For example, a knowledge engineer creates and stores representations in the knowledge base of an expert system. Beyond just conceptualizing--something other animals can do--humans create linguistic structures, which objectify, name, classify, order, and justify relationships. Reflection includes acts of framing what we are doing, telling causal stories to link elements into a scene, projecting future events, and planning our future actions (Schön, 1990). Again, symbolizing inherently involves acting:

We may draw, write, speak, gesture, or play music. Within a sequence, each "act" occurs automatically, as part of the process by which new perception-action sequences are coordinated. In the case of natural and graphic languages, the sequences of behavior produce compositions that include and exclude elements, chain parts of a story, express causal relationships, and model a situation. As such, symbolizing builds on and relies on our capability to automatically coordinate multiple concerns in our behavior: multiple modes of perception, multiple values and goals on different levels, and of course syntactic and semantic relations. A second order benefit is that our expressions, when placed in the external environment, can be preserved and reinterpreted later (e.g., using a trip diary to plan another visit to the same location) and, especially, shared with other people (e.g., maps, journal articles, texts). In this sense, referring to a box of camping gear in the closet, or a list of what to take on the next trip, SA theorists say that knowledge is not strictly inside the head, but a coupling between adapted neurological processes and how we have structured our environment.

Representing (e.g., saying to myself what I will do next) recoordinates behavior, not via symbolic "instructions" (coded tokens passed serially downstream to other subsystems), but via the process of representing itself. That is, articulating is a process of physically recoordinating how we see and act. Behavior is mediated temporally and causally by such acts, in the sense that my speaking now affects what I do later. Contrary to the symbolic view, behavior is not mediated in the sense of a plan or recipe or rule that must be later read, related to my current activity, and translated into motor commands to be causally efficacious--it already has that effect because of my comprehending. Models involving "compiling" symbolic instructions into symbolic rules for later more-automatic processing are no better, for they still view learning as strictly linguistic manipulation.

Deliberating and using plans goes on in my activity. If time allows and the occasion requires, I can reconstruct or retrieve a plan (from my back pocket or memory) and reinterpret it, or remind myself of what I intended to do, again a sequence of interactions. I read and follow plans in my activity, not behind the scenes in hidden, subconscious deliberation:

Using the terms "plan," "symbol," "representation," and "encoding" to simultaneously refer to what a person does over time in sequences of coordinated activity, as well as to internal neurological processes, is confusing at best (witness V&S's difficulty of applying their meaning of "plan" to Suchman's analysis) and scientifically bankrupt at worst (assuming what needs to be explained).

The symbolic approach reduces comprehending a representation to a matching process because memory is viewed as a body of stored descriptions and programs: "The memory is an indexed encyclopedia" (p. 4) and "...the storage of such strategies" (p. 11). V&S clearly identify internal neural structures and processes with the form and use of external representations (e.g., an encyclopedia). Saying "internal representations have all the properties of symbol structures" (p. 36) is clearly wrong. First-person symbol structures, such as sentences on a page, can be rearranged, reperceived, and reinterpreted (plus stored and brought out unchanged, handed over to other people directly, overlaid by graphics and other notations). V&S confuse the carpenter with his tools. Ryle (1949, p. 292 ff.) spoke clearly about this category error, which presumes that the behavior we see outside (inferring, forming hypotheses, posting alternatives, translating between representations, comparing cases, etc.) must also be going on inside. (Of course, it can go on inside in another sense when the person is talking to himself.)

The purely symbolic view of comprehending has also obscured why expert systems work. Crucially, a human user of an expert system is not just providing a link to a data base, but reinterpreting what the queries mean in the current situation. Providing an interpretation for the rule representations, what they mean, is prone to change and is not recorded in the program. We can store examples (e.g., a pregnant woman is a compromised host), but even these strings must be interpreted (e.g., is a woman who missed a period necessarily pregnant?). The judgment a person provides interpreting MYCIN's prompts for data, as adapted conceptualization, is radically different in kind from the judgment MYCIN provides in syntactically interpreting its rules. The person is recoordinating perceptual and conceptual categorizations; MYCIN is only manipulating a symbolic calculus.

Again, the inadequacy lies in the conflated meaning of "symbol" in the physical symbol system hypothesis. V&S say, "We call patterns symbols when they can designate or denote" (p. 3). This doesn't make a distinction between a stand-in relational mapping (e.g., in the sense of a name in a database designating a record on a remote file) and a person reconceptualizing when comprehending text (e.g., when MYCIN-team experts reinterpret what the symbols in a rule mean, with respect to how they fit new patient cases).

Brooks' insects and Pengi don't use 1st person representations; they don't have symbolic expressions like texts or knowledge base rules that they reconceptualize. V&S's claim about Brooks' insects that there is "every reason to regard these impulses and signals as symbols" is of course a 3rd person view. These robots use symbols as "stand ins," like the relation between letters and Morse code (Bickhard, in preparation)5. But in contrast with 3rd person symbols in most other programs, Agre's and Chapman's stand-ins represent whole perception-action coordinations. It is no coincidence that Dewey uses the same hyphenated notation to show a dialectic, sensorimotor relation. Dewey argues that the "reflex arc" is a circuit, a whole coordination that dialectically relates perception and action, indexically combining value categories with actions (e.g., "seeing-of-a-light-that-means-pain-when-contact-occurs" (Dewey, 1896, p. 138)).

"A person must carry out a complex encoding of the sensory stimuli that impinge on eye and ear" (p. 37) conflates stand-in perceptual categorization with encoding as a person's acts of modeling or sense-making, which requires sequences of coordinated perception-action activity (e.g., when a nurse classifies a patient coming into a clinic by checking off symptoms in a chart). Using the term "encoding" for pre-linguistic categorizing as well as for human problem solving (e.g., creating symbolic representations on a chart) conflates different kinds of representations, viewing not only what is inside as identical to what is outside, but losing the distinction of what different levels of representationality can do (Bickhard, in preparation).

It should now be clear that "SA is not supposed to require a representation of the real-world situation being acted upon" (p. 24) because the agent has no names for pre-linguistic neurological organizations; they cannot be strictly mapped onto names and rules. There is no body of behaviors that can be inventoried, no repertoire of mechanical coordinations. Each coordination is always a new composition, built by physical processes of activation, selection, and subsumption, but not ontologically grounded in some set of primitive objects, properties or relations in the world; indeed, the grounding is the other way around. Regularities develop, but without requiring us to represent them as rules or graphic networks or pictures. The obvious example is of a child learning to speak before being taught an abstract grammar.

The term "3rd person representation" is appropriate for describing a designer's relation to structures in a computer program. But are scientists likely to find stable neurological structures that can be given symbolic (linguistic) interpretation? Two hypotheses argue against a clear correspondence between the agent's words and neurological structures: Neurological structures are always new (not literally the same physical structure) and they are always activated as part of an ongoing coordination as circuits. But a reasonable hypothesis is that stable maps involving Broca's and Wernicke's areas, coordinating linguistic activity, may have 3rd person interpretation. Our 3rd person definitions of these "symbols" might then be stated in terms of relations between perception-value-action relations (i.e., a mapping of a mapping of types of maps), rather than isolated perceptual or conceptual categories.

5. Crucially, stand-ins may be expressed by the scientist in a language, but they do not constitute a language to the robot, in the sense that there is no conventional lexicon, no syntactic ordering conveying roles of terms, and no meaning other than a formal, relational mapping.

A robot built on Phoenix/NAVLAB principles may indeed be a useful tool for modeling and hence improve our understanding of multi-agent collaboration in uncertain environments. But we need to understand the residue: What must humans do in making sense of and adapting prescribed methods and policies that such models do not replicate? How can we account for development that steps outside of a given theory? In saying that the perceptual strategy model of the Tower of Hanoi problem solver "is clearly a symbolic information processing system, demonstrating that such a system can carry out situated action" (p. 26) V&S again equate a model with human capabilities6. But this program does not learn. Situated action inherently involves learning new coordinations in the course of every interaction; learning is not just a "second-order effect" (Newell and Simon, 1972; p. 7).

V&S state: "Arguing that the fire environment does not represent a legitimate testing ground for situated action would be analogous to suggesting that a pilot in a flight simulation is not acting situatedly because the experience is being artificially created" (p. 20). This is the wrong comparison. A flight simulator is not equivalent to a formal model of a flight simulator. The control panels and their physical arrangements are not described, but actual; the simulator can be interacted with directly by a person in an embodied way (by coordinating sensorimotor activity while sitting in a seat, orienting to multiple displays, and pushing buttons). By viewing the firefighter's world exclusively in terms of linguistic representations of objects and events, Phoenix research (Cohen, et al., 1989) effectively omits the pre-linguistic information and processes by which firefighters determine, for example, that a situation is problematic7. In particular, drawing analogies to interpret policy--and knowing that it must be reinterpreted--is grounded in previous sensorimotor experiences (Schön, 1979; Zuboff, 1988).

Chapman's remark that "a problem...can be solved once and for all" (p. 32) is discussed by Lave (1988). The idea is that once we have a problem formulation, a problem description and operators in some ontology, we have a logical puzzle. What remains is purely calculus: manipulating expressions according to the predefined rules, modifying rules by other predefined rules, etc. In contrast, problem formulation for people arises in their coordinated activity, as perceptual categorizations, and linguistic creations that are always at some level new (relative to symbolic models of what is happening). That is, problems in part arise because of discoordination, an inability to automatically recoordinate perceptual, conceptual, and symbolic categorizations. Studying intelligence by supplying 1st person problem formulations (as in the Tower of Hanoi) misses this underlying dialectic process (Schön, 1979), wrongly suggesting that action begins and proceeds only with linguistic descriptions (Reeke and Edelman, 1988; Rosenfield, 1988).

Pengo can "be solved once and for all" (p. 32) in the sense that we can represent the Pengo world and write a program to play it. Obviously, the game will be played within changing situations and the program will be more or less successful given its available resources. It is true that "a new theoretical paradigm" is not required to play a video game (p. 33). V&S miss the point that Pengo is a model, a tool, for helping us better articulate the nature of coordinated activity.

V&S believe that learning by EPAM, adaptive production systems, UNDERSTAND, etc. demonstrates flexibility and creativity equivalent to people: "Symbolic systems... continually revise their descriptions of the problem space and the alternatives available to them" (p. 7). But they do this in an ontologically closed space, with symbolic structures grounded only in other symbols. Any system with such a foundation will be limited relative to human creativity, regardless of how much is built in or how much it "learns." For example, AARON (McCorduck, 1991) produces an infinite number of different drawings, but is categorically bound (i.e., its performance lies within prescribed classes and their relations, consisting of fewer than a dozen people, many trees, no crocodiles). Modifying the ontological structures after a program fails (Cohen rewrote AARON four times in the past decade) merely begs the issue of how such mechanisms will achieve human competence without their human programmers and critics to tend them.

Saying that people have knowledge that programs lack is circular, suggesting again (in the knowledge-acquisition-as-transfer mistake) that such linguistic, 1st person representations pre-exist before we create them. How do people create the representations they put into the program? By hypothesizing the structures inside and outside the head to be the same in kind, the symbolic approach is forced to reduce meaning to representations of meaning, speaking to what has already been predescribed inside, and hence problem formulation to searching stored or external representations8 (Tyler, 1978).

A corollary is that the symbolic approach inadequately construes how cognitive models are themselves created, and their relation to the phenomena we sought to understand. For example, in our sense making and inquiry, we classify a physician's behavior in medical diagnosis as an instance of gathering data about the patient (e.g., the question, "When did the fever begin?"). We try to find rules that can generate a sequence of such classified behaviors (e.g., we determine that the physician is following the strategy of asking follow-up questions). But we ignore the tone of voice, the physician's posture, the wave of the hands, the glance over to the left corner of the room. Whenever we suppose that there are acts at all, as discrete units, we are adopting the eyeglasses of a functional analysis, which breaks behavior into phases of thinking and doing, stimulus and response, gathering information and making conclusions. In merely saying what the act is, we have classified. Abstracting the behavior, we are no longer dealing with neurological coordination processes themselves, but their product (indeed, a mixture of the agent's and the observer's 1st person representations: words, plans, rules, and discourse patterns). Dewey tells us that this is an old philosophical mistake: "They treated a use, function, and service rendered in conduct of inquiry as if it had ontological reference apart from inquiry" (Dewey, 1896, p. 320).

Again, we have a recursive problem: We fail to see the inadequacy of our models of problem solving because we judge their veracity in terms of our models of problem formulation. To break out, to form a scientific theory of cognition that would enable us to build an intelligent machine, we must move to the social and neurological levels. This requires explaining how representation systems evolved in living systems and develop in each child from a non-symbolic foundation (Edelman, 1992).

7. If the flight simulator uses simulated images of runways, it is similarly ontologically bound; models and the world can be integrated for varying degrees of freedom.

8. Bickhard (in preparation) asserts that encodingism is the asymptotic limit of interactivism (SA). That is, within a stable environment, a symbolic calculator can be supplied with an appropriate starting ontology and incrementally modified to approach human capability.

| | |
|---|---|
| 1) Social anthropology, sociolinguistics: Social view of everyday practice. Distinction between practice and theory; culture is not reducible to a list of beliefs, conventions, or general rules. | 4) Neuropsychology: More detailed understanding of cognitive processes in the brain. Distinction between deliberation and coordination. |
| Shift from viewing all behavior as generated from internally pre-stored facts and rules to inquiring about theories people actually do create and use. What is the role of a theory? What is the nature of skills, habits, & improvisation? Contrast with calculus: representation manipulation by prestated rules and procedures (e.g., subtraction, expert system inference, syntactic machine learning). | How is complex, multi-modal sensorimotor coordination possible without symbolic instruction or reflection that describes patterns and reasons about alternative actions? |
| 2) Situated cognition: More detailed understanding of how individuals learn, how representations are created and used. Distinction between representations we can perceive, representing to ourselves, and neural structures. | 3) Philosophy: Foundational analyses of concepts, meaning, and models. Distinction between models and knowledge. |
| What is the role of plans, models, maps? Why do we need a map, and what do we do with it in our behavior (i.e., how do we actually use it)? | How do we act without having a theory (i.e., without manipulating representations and plans)? How do we "find our way about" (Wittgenstein, 1958)? How is familiarity embodied? (Dreyfus and Dreyfus, 1986) |

The social view accepts the representational focus of cognitive science and AI. But applying the ethnomethodological stance (Berger and Luckmann, 1967), rather than imputing representations as being hidden and manipulated in the head of agents, the social view looks to see how people create, modify, and use representations (e.g., slides for a talk, architectural drawings, texts, expert systems) in their visible behavior.

Thus, we have a fresh look at where representations come from, how they are learned and what they do for us (Bamberger, 1991; Schön, 1987; Suchman, 1987; Wenger, in press).

Unfortunately, this difficult attempt to bridge social and cognitive views has caused a number of misunderstandings. For example, V&S believe that the cottage cheese anecdote is meant to illustrate that "knowledge about interaction with real-world objects is not symbolically represented" (p. 18). Instead, the example demonstrates that inquiry is a complex coupling of physical materials, sensorimotor coordinations (including pre-linguistic ways of seeing), and 1st person representation and manipulation of constraints. Pedagogically, the cottage cheese story celebrates inventiveness and the importance of teaching representational conventions without destroying creativity, without leading kids to believe there is only one way to think, only one "correct" way of describing facts and working problems (Brown, et al., 1988).

The repeating theme is the relation of formal models to skills (know-how, unarticulated coordinations). V&S suggest that it doesn't matter what we call this problem, saying that Lave has merely "reformulated" it in different terms. Ironically, Lave, Schön and others are calling attention to just this, the process of problem formulation, the effect of initial metaphors on the perceived problem space, how in fact naming and problem framing occur. That is, the question at hand is "how shall we frame the problem of problem framing?" Labeling the learning problem as "transfer" ignores how framing a problem constrains a space of solutions (e.g., like calling people "homeless") (Schön, 1979). Saying that transfer is "frequently addressed in the learning literature" (p. 18) does not respond to Lave's concern that cognitive theories of learning in the literature are inadequate. The "capture and transfer" view of learning10 suggests that all knowledge can be written down in symbolic models, that what people know can be inventoried (as facts and generative rules), that a symbolic program could be equivalent to a human being's intelligence. Lave (1988) rejects these assumptions. She grapples with the issue of what constitutes cultural knowledge and how it is reproduced and adapted without exhaustive 1st person representation of its content.

V&S's phrase "so long as a system can be provided with a knowledge base" (p. 38) suggests that social knowledge can be inventoried, that we share representations of how to act (e.g., descriptions of conventions, grammars, scripts, belief networks). On the contrary, SA argues that our ability to coordinate our activity by gesturing, orienting our bodies, mimicking sounds and postures, etc., interactively, in our behavior, couples our perception and action, providing the foundation for speaking a common language, constructing shared causal models, deliberating and planning complex activities (Lave, 1988). Again, 1st person representations in our statements and formal models, exemplified by knowledge bases of expert systems, are not the substrate of intelligent behavior. Such social constructions are only possible because we have similar pre-linguistic experiences, including similar coordinations of values, perceptual categories, and behaviors (Edelman, 1992). Lave's phrase, "transformational relations which are part of 'intentionless but knowledgeable inventions'," (p. 17) refers to the underlying pre-linguistic, adaptive coordinating and categorizing, what we commonly call "know-how." And indeed, at a higher level, the relation between increasing neural and social organizations is dialectic. In a group we are mutually constraining each other's perception and sequencing, so our capabilities to interact are both developed within and manifestations of social, multi-agent interactions. Because the regularities in these interactions (e.g., turn taking) are not based on representations of the patterns themselves, the system of multiple situated agents constitutes a higher-order, self-organizing system:

If we desire to explain or understand the mental aspect of any biological event, we must take into account the system--that is, the network of closed circuits within which that biological event is determined. But when we seek to explain the behavior of a man or any other organism, this 'system' will usually not have the same limits as the 'self'--as this term is commonly (and variously) understood...mind is immanent in the larger system, man plus environment. (Bateson, 1972, p. 307)

Table 2 summarizes most of the distinctions I have drawn here.

| | Symbolic approach | Situated action (SA) |
|---|---|---|
| memory | stored rules or schema structures in a representation language | neural nets reactivated and recomposed in-line via selection; not a place or body of descriptions of how the world or agent's behavior appears. |
| representation | meaningful forms internally manipulated subconsciously | created and interpreted in our activity (1st person); external representations ≠ representing to self ≠ neural structures |
| internal processes | modularly independent; can perceive and reason without acting | co-determined, dialectic; always adapted (generalized from past coordinations), inherently chronological |
| immediate behavior | selected from prepared possibilities ("pre-existing actions") | adapted, composed, coordinated, always new; always a sensorimotor circuit |
| reasoning | supplants immediate behavior; goes on subconsciously | occurs in sequences of behavior over time |
| speaking | meaning of the utterance is represented before speaking occurs | speaking and conceiving occur dialectically; representing meaning occurs as later commentary behavior |
| learning | secondary effect (chunking) | primary learning is always occurring with every thought, perception, and action; chunking occurs as categorization of sequences; secondary (reflective) learning occurs in sequences of behavior over time; requires perception. |
| knowledge representation (cognitive model) | corresponds to physical structures stored in human's brain | a model of some system in the world and operators for manipulating the model; abstracts agent's behavior, explaining interaction in some environment over time. |
| concepts | labeled structures, corresponding to linguistic terms, with associated descriptions of properties and relations to other concepts, i.e., meanings are symbolically represented and stored | pre-linguistic categorizations of perceptual categorizations; ways of coordinating perception and action; have no inherent formal structure; cannot be inventoried; meaning and perception are inseparable. |
| analogy | feature mapping of concept representations | process of perceiving and acting by recomposing previous coordinations (e.g., "seeing as") |

10. Exemplified by my dissertation, "The transfer of rule-based expertise in a tutorial dialogue."

V&S's view of SA is that "planning and representation, central to symbolic theories, are claimed to be irrelevant in everyday human activity." Quite the contrary, Suchman is explaining how plans are used in everyday activity. V&S state that "Suchman takes the rather extreme position that plans play a role before and after action, but only minimally during it" (p. 10). This reveals their view that deliberation, referring to stored internal plans, is required for every action. Newell's (1990, p. 135) use of the term "elementary deliberation" for automatic behavior shows the same bias. When Suchman says, "Plans as such neither determine the course of situated action nor adequately reconstruct it" (p. 11), she means that interpretation of plans (reading and comprehending a plan or creating a new one) goes on in our behavior as coordinated sequences of perceiving and acting, not by internally linking peripheral perceptual and motor systems.

By such a neuropsychological interpretation, we find that SA is not making obviously wrong statements, but rather profound, and at first difficult to understand, claims about cognition--essentially turning symbolic models inside out: Every act of deliberation occurs as an immediate behavior. That is, every act of speaking, every motion of the pen, each gesture, turn of head, or any idea at all is produced by the cognitive architecture as a matter of course, as a new neurological coordination. The contrast is not between immediate behavior and deliberate behavior (cf. Newell, 1990, p. 136). The contrast is between 1) dialectic coupling of sensorimotor systems in an on-going sequence of coordinations and 2) perceived sequences of behaviors, which we name, classify and rationalize.

For example, the diagnostic strategies of NEOMYCIN (Clancey, 1988) abstractly describe the sequence of physician requests for patient data in terms of goals, shifting attention, and the developing form of the model of the patient. If a physician refers to such a plan, it is in his or her activity, not in a hidden way, but as a person generating ideas and reflecting on them (Ryle, 1949). NEOMYCIN is inadequate as a cognitive model in the sense that it doesn't distinguish between 1st and 3rd person representations, between the gear-like mapping of relational models and the adaptive interpretation involved in using NEOMYCIN's rules in problematic situations.

Deliberating, what Dewey (1938) called "inquiry," occurs in the course of our behavior, in the relation of our shifts of attention, representational acts, and reshaping of materials around us--not between perceiving and acting, but through it, over time, in cycles of reperceiving, reshaping, and reenacting what we have said and done before. This remaking involves both internal recomposing and recoordinating of perception-action sequences, as well as physical manipulation of materials in our environment (e.g., rewriting a paragraph). In this sense, Bamberger and Schön (1983), following Dewey, view sense-making as integral with a process of making things, which puts primacy on observing what people do. Compare the sterile representation of disks and pegs in the Tower of Hanoi program to a journal article, an architectural drawing, or blocks arranged to describe a melody (Bamberger, 1991). How do people create such representations? How do interpretations and uses change within changing activities and values? (Schön, 1987)

The physical symbol system hypothesis, which equates symbols inside and out, is bolstered by the idea that comprehension of text or interpretation of a plan or graphic involves only reconfiguring representations (e.g., dictionary definitions, grammars, scripts). Similarly, speaking is viewed as a process of pre-representing inside what you intend to say, as well as its grammatical form and meaning. The implied claim of SA is that when today's computer programs read and "comprehend" text, they do so only in a mechanical, rote-like way, bound by symbolic structures and calculi.

V&S say that "the symbolic approach has never disagreed...that there is more to understanding behavior than describing internally generated symbolic goal-directed planning." Simon may have had a broader view, but this is a poor summary of the assumptions driving student modeling in intelligent tutoring systems, knowledge acquisition in expert systems, or the CYC project (Smith, 1991). As I have shown, SA is critically at odds with V&S's beliefs: Human knowledge is not equivalent to a body of knowledge representations. Symbolizing and comprehending are processes that occur in human behavior, not as internal linkages between perception and action. Learning is a primary phenomenon.

To make progress, to evaluate and refine the emerging neuropsychological model of SA (Figure 2), we should consider the broader analyses of the social sciences and philosophy. Sacks (1987), for example, shows how an ethnographic approach provides a broader view of how cognition develops within and is manifest in everyday life. As a heuristic for research, we must re-examine structures and processes in our cognitive models and clearly state which correspond to internal 3rd person representations; which to representing to oneself (without creating structures that are perceived); and which to 1st person representations in the world (which are reshaped and perceived in cycles of activity). SA research strongly suggests that philosophical arguments, such as Ryle's (1949) and Wittgenstein's (1958) critiques of rule-based models of cognition, are understandable and provide relevant heuristic guidance (Tyler, 1978; Dreyfus and Dreyfus, 1986; Lakoff, 1987; Edelman, 1992; Slezak, in preparation).

SA doesn't require "a whole new language," (p. 42) but it does require that we watch how we use our words, particularly, "memory," "knowledge," "information," "symbol," "representation," and "plan." SA does suggest a different research agenda, as is amply demonstrated by the work of all the people cited by V&S, who are studying human behavior in a new way (Lave, 1988; Suchman, 1987), using models in a new way in the classroom (Bamberger, 1991; Schön, 1987), inventing new robot designs (Maes, 1990), using computers in new ways in business (Kukla, et al., 1990), or developing new models of the brain (Iran-Nejad, 1987; Edelman, Freeman, Rosenfield, Sacks). Certainly, it isn't necessary (or perhaps possible) to "break completely from traditional theories"; what is needed instead is to reconsider the relation of our models to the cognitive phenomena we sought to understand. Symbolic models, as tools, will always be with us. Yet, already the shift has begun from viewing them as intelligent beings to viewing them as but the shadow of what we must explain.

Alexander, C. 1979. A Timeless Way of Building. New York: Oxford University Press.

Arbib, M.A. 1981. Visuomotor coordination: From neural nets to schema theory. Cognition and Brain Theory, IV(1): 23-40.

Bamberger, J. and Schön, D.A. 1983. Learning as reflective conversation with materials: Notes from work in progress. Art Education, March, pp. 68-73.

Bamberger, J. 1991. The mind behind the musical ear. Cambridge, MA: Harvard University Press.

Bartlett, F. C. [1932] 1977. Remembering: A Study in Experimental and Social Psychology. Cambridge: Cambridge University Press. Reprint.

Berger, P.L. and Luckmann, T. 1967. The Social Construction of Reality: A Treatise in the Sociology of Knowledge. Garden City, NY: Anchor Books.

Bateson, G. 1972. Steps to an Ecology of Mind. New York: Ballantine Books.

Bickhard, M. H. (in preparation). Levels of representationality. Presented at the McDonnell Foundation Conference on The Science of Cognition, Santa Fe.

Bickhard, M. H. and Richie, D.M. 1983. On the Nature of Representation: A Case Study of James Gibson's Theory of Perception. New York: Praeger Publishers.

Bickhard, M. H. and Terveen, L. (in preparation). The Impasse of Artificial Intelligence and Cognitive Science.

Braitenberg, V. 1984. Vehicles: Experiments in Synthetic Psychology. Cambridge: The MIT Press.

Brooks, R.A. 1991. How to build complete creatures rather than isolated cognitive simulators. In K. VanLehn (ed), Architectures for Intelligence: The Twenty-Second Carnegie Symposium on Cognition, Hillsdale: Lawrence Erlbaum Associates, pp. 225-240.

Brown, J. S., Collins, A., and Duguid, P. 1988. Situated cognition and the culture of learning. IRL Report No. 88-0008. Shorter version appears in Educational Researcher, 18(1), February, 1989.

Clancey, W.J. 1987. Review of Winograd and Flores' "Understanding Computers and Cognition": A favorable interpretation. Artificial Intelligence, 31(2), 232-250.

Clancey, W.J. 1988. Acquiring, representing, and evaluating a competence model of diagnosis. In M.T.H. Chi, R. Glaser, and M.J. Farr (editors), The Nature of Expertise. Hillsdale: Lawrence Erlbaum Associates, pp. 343-418.

Clancey, W.J. 1989. The knowledge level reconsidered: Modeling how systems interact. Machine Learning, 4(3/4):285-292.

Clancey, W.J. 1991a. Review of Rosenfield's The Invention of Memory. Artificial Intelligence, 50(2), 241-284.

Clancey, W.J. 1991b. The frame of reference problem in the design of intelligent machines. In K. VanLehn (ed), Architectures for Intelligence: The Twenty-Second Carnegie Symposium on Cognition, Hillsdale: Lawrence Erlbaum Associates, pp. 357-424.

Clancey, W.J. 1992a. Model construction operators. Artificial Intelligence, 53(1):1-115.

Clancey, W.J. 1992b. Representations of knowing: In defense of cognitive apprenticeship. Journal of AI and Education, 3(2):139-168.

Clancey, W.J. (in preparation b). Guidon-Manage revisited: A socio-technical systems approach. Submitted to the Journal of AI and Education.

Clancey, W. J. (in preparation c). 'Situated' means coordinating without deliberation. Presented at the McDonnell Foundation Conference on The Science of Cognition, Santa Fe.

Cohen, P. R., Greenberg, M.L., Hart, D.M., and Howe, A.E. 1989. Trial by fire: Understanding the design requirements for agents in complex environments. The AI Magazine 10(3):34-48.

Collingwood, R. G. 1938. The Principles of Art, London: Oxford University Press.

Dewey, J. [1896] 1981. The reflex arc concept in psychology. Psychological Review, III:357-70, July. Reprinted in J.J. McDermott (ed), The Philosophy of John Dewey, Chicago: University of Chicago Press, pp. 136-148.

Dewey, J. 1938. Logic: The Theory of Inquiry. New York: Henry Holt & Company.

Dewey, J. [1938b] 1981. The criteria of experience. In Experience and Education, New York: Macmillan Company, pp. 23-52. Reprinted in J.J. McDermott (ed), The Philosophy of John Dewey, Chicago: University of Chicago Press, pp. 511-523.

Dewey, J. and Bentley, A.F. 1949. Knowing and the Known. Boston: The Beacon Press.

Dreyfus, H.L. and Dreyfus, S.E. 1986. Mind Over Machine. New York: The Free Press.

Edelman, G.M. 1987. Neural Darwinism: The Theory of Neuronal Group Selection. New York: Basic Books.

Edelman, G.M. 1992. Bright Air, Brilliant Fire: On the Matter of the Mind. New York: Basic Books.

Farah, M.J. (in preparation). Cognitive neuropsychology without the "transparency assumption." Presented at the McDonnell Foundation Conference on The Science of Cognition, Santa Fe.

Freeman, W. J. 1991. The Physiology of Perception. Scientific American, (February), 78-85.

Gleick, J. 1987. Chaos: Making a New Science. New York: Viking.

Greenbaum, J. and Kyng, M. 1991. Design at Work: Cooperative design of computer systems. Hillsdale, NJ: Lawrence Erlbaum Associates.

Gregory, B. 1988. Inventing Reality: Physics as Language. New York: John Wiley & Sons, Inc.

Iran-Nejad, A. 1984. Affect: A functional perspective. Mind and Behavior, 5(3):279-310, Summer.

Iran-Nejad, A. 1987. The schema: A long-term memory structure or a transient functional pattern. In R.J. Tierney, P.L. Anders, and J.N. Mitchell (editors), Understanding Readers' Understanding: Theory and Practice. Hillsdale: Lawrence Erlbaum Associates, pp. 109-127.

Kukla, C.D., Clemens, E.A., Morse, R.S., and Cash, D. 1990. An approach to designing effective manufacturing systems. To appear in Technology and the Future of Work.

Laird, J.E. and Rosenbloom, P.S. 1990. Integrating Execution, Planning, and Learning in SOAR for External Environments. Proceedings of the Eighth National Conference on Artificial Intelligence. Menlo Park, CA: The AAAI Press, pp. 1022-1029.

Lakoff, G. 1987. Women, Fire, and Dangerous Things: What Categories Reveal about the Mind. Chicago: University of Chicago Press.

Lave, J. 1988. Cognition in Practice. Cambridge: Cambridge University Press.

Lewis, R. et al. 1990. Soar as a unified theory of cognition: Spring 1990 Symposium. Proceedings of the Twelfth Annual Conference of the Cognitive Science Society. Hillsdale, NJ: Lawrence Erlbaum Associates, pp. 1035-1042.

Maes, P. 1990. Designing Autonomous Agents, Guest Editor. Robotics and Autonomous Systems 6(1,2) 1-196.

McCormick, P. 1991. AARON's Code: Meta-Art, Artificial Intelligence, and the Work of Harold Cohen. New York: W.H. Freeman and Company.

Newell, A. 1980. Physical symbol systems. Cognitive Science, 4(2):135-183.

Newell, A. 1990. Unified Theories of Cognition. Cambridge, MA: Harvard University Press.

Newell, A. and Simon, H.A. 1972. Human Problem Solving. Englewood Cliffs, NJ: Prentice-Hall, Inc.

Nonaka, I. 1991. The Knowledge-Creating Company. Harvard Business Review, November-December, pp. 96-104.

Reeke, G.N. and Edelman, G.M. 1988. Real brains and artificial intelligence. Daedalus, 117 (1) Winter, "Artificial Intelligence" issue.

Roschelle, J. and Clancey, W. J. (in preparation). Learning as Social and Neural. To appear in Educational Psychologist.

Rosenfield, I. 1988. The Invention of Memory: A New View of the Brain. New York: Basic Books.

Rosenschein, S.J. 1985. Formal theories of knowledge in AI and robotics. SRI Technical Note 362.

Ryle, G. 1949. The Concept of Mind. New York: Barnes & Noble, Inc.

Sacks, O. 1987. The Man Who Mistook His Wife for a Hat. New York: Harper & Row.

Schön, D.A. 1979. Generative metaphor: A perspective on problem-setting in social policy. In A. Ortony (ed), Metaphor and Thought. Cambridge: Cambridge University Press, pp. 254-283.

Schön, D.A. 1987. Educating the Reflective Practitioner. San Francisco: Jossey-Bass Publishers.

Schön, D.A. 1990. The theory of inquiry: Dewey's legacy to education. Presented at the Annual Meeting of the American Educational Association, Boston.

Slezak, P. (in preparation). Situated cognition: Minds in machines or friendly photocopiers? Presented at the McDonnell Foundation Conference on The Science of Cognition, Santa Fe.

Smith, B.C. 1991. The owl and the electric encyclopedia. Artificial Intelligence 47(1-3):251-288.

Smoliar, S.W. 1989. Review of Neural Darwinism: The Theory of Neuronal Group Selection, by G.M. Edelman. Artificial Intelligence 39(1), 121-136.

Suchman, L.A. 1987. Plans and Situated Actions: The Problem of Human-Machine Communication. Cambridge: Cambridge University Press.

Tyler, S. 1978. The Said and the Unsaid: Mind, Meaning, and Culture. New York: Academic Press.

Vera, A.H. and Simon, H.A. (in preparation). Situated action: A symbolic interpretation. To appear in Cognitive Science.

Vygotsky, L. [1934] 1986. Thought and Language. Cambridge: The MIT Press. Edited by A. Kozulin.

Wenger, E. (in press). Toward a Theory of Cultural Transparency. Cambridge: Cambridge University Press.

Winograd, T. and Flores, F. 1986. Understanding Computers and Cognition: A New Foundation for Design. Norwood: Ablex.

Wittgenstein, L. 1958. Philosophical Investigations. New York: Macmillan Company.

Wynn, E. 1991. Taking Practice Seriously. In J. Greenbaum and M. Kyng (eds), Design at Work: Cooperative design of computer systems. Hillsdale, NJ: Lawrence Erlbaum Associates, pp. 45-64.

Zuboff, S. 1988. In the Age of the Smart Machine: The Future of Work and Power. New York: Basic Books.