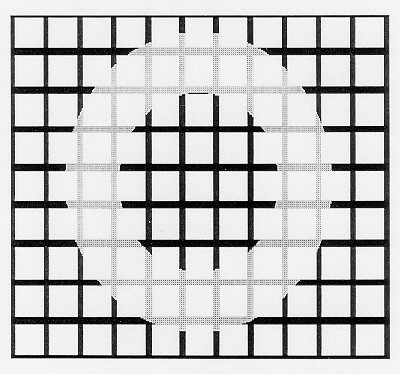


One of the things you learn if you read books and articles in (or about) cognitive science is that the brain does a lot of "filling in"--not filling in, but "filling in"--in scare quotes. My claim today will be that this way of talking is not a safe bit of shorthand, or an innocent bit of temporizing, but a source of deep confusion and error. The phenomena described in terms of "filling in" are real, surprising, and theoretically important, but it is a mistake to conceive of them as instances of something being filled in, for that vivid phrase always suggests too much--sometimes a little too much, but often a lot too much. Here are some examples (my boldface throughout).

Many experiments have demonstrated the existence of apparent motion, or the phi phenomenon. If two or more small spots separated by as much as 4 degrees of visual angle are briefly lit in rapid succession, a single spot will seem to move. Nelson Goodman once asked Paul Kolers whether the phi phenomenon persisted if the two illuminated spots were different in color, and if so, what happened to the color of "the" spot as "it" moved? Would the illusion of motion disappear, to be replaced by two separately flashing spots? Would the illusory "moving" spot gradually change from one color to another, tracing a trajectory through the color solid? The answer, when Kolers and von Grünau (1976) performed the experiments, was striking: the spot seems to begin moving and then change color abruptly in the middle of its illusory passage toward the second location.

Goodman describes the phenomenon this way:

. . . each of the intervening places along a path between the two flashes is filled in . . . with one of the flashed colors rather than with successive intermediate colors. (Goodman, 1978, p. 85)

This seems to raise metaphysically vertiginous problems about time, since, as Goodman wonders,

how are we able . . . to fill in the spot at the intervening place-times along a path running from the first to the second flash before that second flash occurs? (Goodman, 1978, p. 73)

How indeed is this possible? Sir John Eccles goes so far as to say that in order to explain such effects we must admit that "the self-conscious mind . . . plays tricks with time" (1977, p. 364)--real Twilight Zone stuff--backwards causation and all that.

That's not really so bad, though, according to Benjamin Libet, for we already have the uncontroversial precedent of spatial filling in:

there is experimental evidence for the view that the subjective or mental "sphere" could indeed "fill in" spatial and temporal gaps. (Libet, 1981, p. 196)

Libet suggests an example:

. . . the neurologically well-known phenomenon of subjective "filling in" of the missing portion of a blind area in the visual field. (Libet, 1985, p. 567)

This is not just neurologically well-known; almost everybody knows that the blind spot in each eye is "filled in" by the brain. There is also auditory "filling in." When we listen to speech, gaps in the acoustic signal can be "filled in"--for instance, in the "phoneme restoration effect" (Warren, 1970). Ray Jackendoff puts it this way:

Consider, for example, speech perception with noisy or defective input--say, in the presence of an operating jet airplane or over a bad telephone connection. . . . What one constructs . . . is not just an intended meaning but a phonological structure as well: one "hears" more than the signal actually conveys. . . . In other words, phonetic information is "filled in" from higher-level structures as well as from the acoustic signal; and though there is a difference in how it is derived, there is no qualitative difference in the completed structure itself. (Jackendoff, 1987, p. 99)

And when we read text, something similar (but visual) occurs. As Bernard Baars puts it:

We find similar phenomena in the well-known "proofreader effect," the general finding that spelling errors in page proofs are difficult to detect because the mind "fills in" the correct information. (Baars, 1988, p. 173)

Howard Margolis, in Patterns, Thinking and Cognition, adds an uncontroversial commentary on the whole business of "filling in":

The "filled-in" details are ordinarily correct. (Margolis, 1987, p. 41)

These authors are not alone. Who among us hasn't felt the temptation to speak of the brain "filling in" one gap or another? And many have succumbed to the temptation in print. Some writers use scare-quotes, some use italics, and some use the term neat, without apology or warning. But even those who wield the phrase without a warning label (such as Nelson Goodman, above) often make it clear by other stylistic devices that they realize that there is something faintly misleading or in-need-of-further-elaboration, or maybe just plain wrong, about this way of speaking. They suggest that it is, at best, a useful shorthand. I think it is worse than that.

The tacit recognition that there is something fishy about the idea of "filling in," if taken dead literally, is wonderfully manifested in this passage from C. L. Hardin's book, Color for Philosophers:

Consider the receptorless optic disk, or "blind spot," formed where the bundle of optic fibers leaves the retina for the brain. We recall that this area is only 16 degrees removed from the center of vision. It covers an area with a 6 degree visual diameter, enough to hold the images of ten full moons placed end to end, and yet there is no hole in the corresponding region of the visual field. This is because the eye-brain fills in with whatever is seen in the adjoining regions. If that is blue, it fills in blue; if it is plaid, we are aware of no discontinuity in the expanse of plaid. (Hardin, 1988, p. 22)

Hardin just can't bring himself to say that the brain fills in the plaid, for this suggests, surely, quite a sophisticated bit of "construction," like the fancy "invisible mending" you can pay good money for to fill in the hole in your herringbone jacket: all the lines line up, and all the shades of color match across the boundary between old and new. It seems that filling in blue is one thing--all it would take is a swipe or two with a cerebral paintbrush loaded with the right color; but filling in plaid is something else, and it is more than he can bring himself to assert.

But as Hardin's comment reminds us, we are just as oblivious of our blind spots when confronting a field of plaid as when confronting a uniformly colored expanse, so whatever it takes to create that oblivion can as readily be accomplished by the brain in either case. "We are aware of no discontinuity," as he says. But if the brain doesn't have to fill in the gap with plaid, why should it bother filling in the gap with blue?

In neither case, presumably, is "filling in" a matter of literally filling in--of the sort that would require something like paintbrushes. We are all pretty sophisticated here, and none of us, surely, thinks that "filling in" is a matter of the brain's actually going to the trouble of covering some spatial expanse with pigment (or some temporal expanse with acoustical vibrations). We all know that the real, upside-down image on the retina is the last stage of vision at which there is anything colored--in the unproblematic ways that reflective physical surfaces are colored. Since there is no literal mind's eye, there is no use for pigment in the brain.

So much for pigment. But still, we may be inclined to think that there is something that happens in the brain that is in some important way analogous to covering an area with pigment--otherwise we wouldn't want to talk of "filling in" at all. As Jackendoff says, speaking of the auditory case, "one 'hears' more than the signal actually conveys"--but note that still he puts "hears" in scare-quotes. What could it be that is present when one "hears" sounds filling silent times or "sees" colors spanning empty spaces? It does seem that something is there in these cases, something the brain has to provide (by "filling in"). What should we call this unknown whatever-it-is? I propose that we call it figment. The temptation, then, is to suppose that there is something, made out of figment, which is there when the brain "fills in" and not there when it doesn't bother "filling in."

Put so baldly, this hunch will inspire few if any converts. The whole idea of figment looks ridiculous (if I have done my job well). It is ridiculous. There is no such stuff as figment. The brain doesn't make figment; the brain doesn't use figment to fill in the gaps; figment is just a figment of my imagination. And I suppose that you are all inclined to agree. Down with figment! But then, what does "filling in" mean, what could it mean, if it doesn't mean filling in with figment? To put it bluntly, if there is no such medium as figment, how does "filling in" differ from not bothering to fill in?

It will help to find a sane view if we consider two different ways in which the following representation might be filled in:

Figure 1 has information about shapes, but no information about colors at all.

Figure 2

Figure 2 has information about colors as well, in the form of a numbered code. For instance, you can tell that two different regions are the same color by determining that they have the same number assigned to them--the sort of discrimination job a computer is good at. If one were to take colored crayons or pens and fill in the regions with the indicated colors, one would have another way in which color information could be filled in--with real color, real pigment. An obvious question is: are the regions in figure 2 "filled in" or not? In one sense, they are, since any procedure that needs to be informed about the color of a region can, by mechanical inspection of that region, extract that information. This is purely informational filling-in, and the system is entirely arbitrary, of course. We can readily construct indefinitely many functionally equivalent systems of representation--involving different coding systems, or different media. Computer graphics systems such as CAD systems, for instance, represent the colors of regions in such a fashion. The regions are represented as ordered n-tuples of points in a virtual three-space ("connect the dots" in effect).

If you make a colored picture on your PC using PC-Paintbrush and store it on a disk, a compression algorithm does something similar: it divides the area into like-colored regions and stores the region boundaries and their color numbers in an "archive" file, rather than storing the color value of each pixel in a bit-map.
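The contrast between storing a value at every pixel and storing one value per like-colored stretch can be sketched with run-length encoding, a simple compression scheme of this general kind. This is an illustrative sketch under stated assumptions: the image is a single row of pixels, the small integers stand in for the color numbers of figure 2, and nothing here is claimed to be the exact format PC-Paintbrush uses.

```python
from itertools import groupby

def encode_runs(row):
    """Collapse a row of color numbers into (color, run_length) pairs:
    one label per like-colored stretch, not one value per pixel."""
    return [(color, len(list(group))) for color, group in groupby(row)]

def decode_runs(runs):
    """Expand (color, run_length) pairs back into one value per pixel,
    i.e. recover the bit-map form."""
    row = []
    for color, length in runs:
        row.extend([color] * length)
    return row

# A hypothetical row: 6 pixels of color 3, 4 of color 7, 2 of color 3.
bitmap_row = [3] * 6 + [7] * 4 + [3] * 2
runs = encode_runs(bitmap_row)

print(runs)                              # [(3, 6), (7, 4), (3, 2)]
print(len(bitmap_row), "values vs", len(runs), "runs")
print(decode_runs(runs) == bitmap_row)   # True
```

Because decoding recovers every pixel, the two forms are informationally equivalent: any procedure that wants the color of a given pixel can extract it from either one, but only the bit-map bothers to store it explicitly.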

A bit-map, as you can see, is yet another form of color-by-numbers, but by explicitly labeling each pixel, it is a form of roughly continuous representation--the roughness a function of the resolution of the pixels. A third way of storing the image on your computer screen would be to take a color photograph, and store the image on, say, a 35mm slide.

These are importantly different representational systems. In the case of the 35mm slide, there is actual dye, literally filling in a region of real space. This, like the bit-map, is a continuous representation of the depicted spatial regions (continuous down to the grain of the film, of course). But unlike the bit-map, color is used to represent color. (A color negative also uses color to represent color, but in an inverted mapping.) Videotape is another medium of roughly continuous representation, but what it stores on the tape is not literally images, but recipes for forming images. A bit-map is also not literally an image, but just an array of values, another sort of recipe for forming an image. An archive file storing the results of a compression algorithm is still another recipe for forming an image, and it can be just as accurate, but it does this by generalizing over regions, labeling each region only once.

Here, then, are three ways of "filling in" color information: color-by-colors, color-by-bit-map, and color-by-numbers. Color-by-numbers is in one regard a way of "filling in" color information, but it achieves its efficiency, compared to the others, precisely because it does not bother filling in values explicitly for each pixel.

Computers can code for colors with numbers in registers (in fact, they can't code anything with anything else!), and no one thinks that the brain uses numbers in registers to code for colors. But that is a red herring; numbers in registers can be understood just to stand for any system of magnitudes, any system of vectors, that a brain might employ as a "code" for colors; it might be neural firing frequencies, or some system of addresses or locations in neural networks, or . . . any system of physical variations you like. Numbers in registers have the nice property of preserving relationships between physical magnitudes, while remaining neutral about any "intrinsic" properties of such magnitudes. Although numbers can be used in an entirely arbitrary way to code for colors, they can also be used in non-arbitrary ways, to reflect the structural relations between colors that have been discovered. The "color solid" is a logical space ideally suited to a numerical treatment--any numerical treatment that reflects the betweenness relations, the oppositional and complementary relations, and so on. Certainly the more we learn about how the brain codes colors, the more powerful a numerical model of human color vision we will be able to devise.
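A toy example of numbers used non-arbitrarily: suppose, as a simplifying assumption borrowed from the HSV convention in computer graphics, that hue alone is coded as an angle on a circle (0 = red, 120 = green, 240 = blue). Then relations such as "opposite" and "between" become arithmetic on the code. The function names are hypothetical, and this is not offered as a model of human color vision, only as a code that preserves structural relations rather than serving as mere labels.

```python
def complement(hue):
    """Return the hue directly opposite `hue` on the circle (180 degrees away)."""
    return (hue + 180) % 360

def between(h1, h2):
    """Return the midpoint of the shorter arc from h1 to h2 on the hue circle."""
    diff = (h2 - h1) % 360
    if diff > 180:
        diff -= 360          # go the short way round the circle
    return (h1 + diff / 2) % 360

print(complement(0))         # 180 (cyan under the HSV convention)
print(between(0, 120))       # 60.0 (yellow under the HSV convention)
print(between(300, 60))      # 0.0 (wraps round through red)
```

The last call shows why the circular code matters: a naive average of 300 and 60 would give 180, the wrong side of the circle.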

The trouble with speaking of the brain "coding" for colors by using intensities or magnitudes of one thing or other is that it suggests to the unwary that eventually these codings have to be decoded, getting us "back to color." (As if the brain might store color information about a parrot once seen in the form shown in figure 2, but then arrange to have the representation "decoded" into "real colors" on special occasions.)

No one takes that idea seriously, of course. Everyone recognizes that I introduced the idea of literally filling in the color-by-numbers parrot with pigment as a sort of joke, to remind you of a sort of limiting case view that nobody takes seriously. But let's just make sure.

Consider the phenomenon of neon color spreading (van Tuijl, 1975).

Figure 4

If the gray lines in the figure were bright red, you would see a pink glow filling in the region of the ring, following the subjective contours. The pink you would see would not be a result of pink smudging of the figure or light scattering. The pink glow you would see would be an entirely internal phenomenon; there would be no pink on your retinal image, in other words (just the red or deep pink lines). Now how might this illusion be explained? Presumably, one brain circuit, specializing in shape, is (mis)led to distinguish a particular bounded region (the ring with its "subjective contours" or the Ehrenstein circle) while another brain circuit, specializing in color but rather poor on shape and location, comes up with a color discrimination (pink #97, let's say) with which to "label" something in the vicinity, and the label gets attached (or "bound") to the whole region.

Figure 5

Why these particular discriminations should occur under these conditions is still controversial, but the controversy concerns the causal mechanisms generating the mislabeling of the region, not the further "products" (if any) of the visual system.

I suspect, however, that some of you will feel dissatisfied with this model of what happens in the neon color spreading effect. It stops short at an explanation that provides a labeled color-by-numbers region: doesn't that recipe for a colored image have to be executed somewhere? Doesn't pink #97 have to be "filled in"? After all, you might be tempted to insist (on seeing the illusion), you see the pink! (You certainly don't see an outlined region with a number written in it.) The pink you see is not in the outside world (it isn't pigment or dye or "colored light"), so it must be "in here"--pink figment, in other words.

Now if you were insisting that somewhere in the brain there is a roughly continuous representation of colored regions--a bit-map--such that "each pixel" in the region has to be labeled "color #97," this would at least be an empirical possibility that we could devise experiments to explore.

The question would be: is there a representational medium here in which the value of some variable parameter (the intensity or whatever that codes for color) has to be transmitted across or replicated across the relevant pixels of an array, or is there just a "single label" of the region, with no further "filling in" or "spreading out" required? That is a good empirical question, and I will not prejudge it. (It would certainly be impressive, for instance, if the color could be shown under some conditions to spread slowly in time--bleeding out from the central red lines and gradually reaching out to the subjective contour boundaries.) But that is an unresolved empirical question about whether the representational medium is captured by something like figure 5 or by something like figure 6. It is not a question about whether there is anything filled in with figment.
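The two hypotheses in this empirical question can be contrasted in a sketch. Under the bit-map hypothesis, a color value must be copied cell by cell across an array, which takes a number of steps and so could, in principle, be caught spreading; under the region-label hypothesis, the value is attached once to a region description and there is nothing further to spread. The grid, the step-by-step flood fill, the function names, and the code `97` are illustrative assumptions, not claims about neural mechanism.

```python
def spread_in_steps(region, seed, color):
    """Bit-map hypothesis: copy `color` outward from `seed`, one ring of
    neighbouring cells per time step, within the cells marked True."""
    rows, cols = len(region), len(region[0])
    bitmap = [[None] * cols for _ in range(rows)]
    r0, c0 = seed
    bitmap[r0][c0] = color
    frontier, steps = [seed], 0
    while frontier:
        next_frontier = []
        for r, c in frontier:
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and region[nr][nc] and bitmap[nr][nc] is None):
                    bitmap[nr][nc] = color
                    next_frontier.append((nr, nc))
        if next_frontier:
            steps += 1       # one more time step of spreading was needed
        frontier = next_frontier
    return bitmap, steps

def label_region(region_name, color):
    """Region-label hypothesis: a single label, attached once, no spreading."""
    return {"region": region_name, "color": color}

# A hypothetical 1 x 5 strip of cells inside the subjective contour.
strip = [[True, True, True, True, True]]
bitmap, steps = spread_in_steps(strip, seed=(0, 0), color=97)
print(bitmap[0], "after", steps, "steps")   # [97, 97, 97, 97, 97] after 4 steps
print(label_region("ring", 97))             # {'region': 'ring', 'color': 97}
```

Both representations carry the information that the ring is color #97; they differ only in the medium, which is exactly the kind of difference experiments on the timing of the spread could detect.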

Now that we have some different models of filling in to work with, let's go back to our first case, the phi phenomenon with the mid-course color switch. What might "filling in" come to in this case, and what are the alternatives?

First, let's be clear about just how vivid the experience is for subjects. Even on the first trial (i.e., without conditioning), subjects report seeing the color of the moving spot switch in mid-trajectory from red to green--a report sharpened by Kolers' use of a pointer device which subjects retrospectively-but-as-soon-as-possible "superimposed" on the trajectory of the illusory moving spot: such pointer locations had the content: "The spot changed color right about here."(Kolers and von Grünau, 1976, p.330.) In other words, it is not just that the subjects say, retrospectively, that they guess or surmise that the spot must have changed color suddenly somewhere along the path; they claim to recall the color change and they point to the place on the trajectory where it seemed to them to happen.

Doesn't that establish that there has to have been a (roughly) continuous representation of the moving spot, a representation generated by the brain somehow, and somehow interposed between the representations it was "given" by the two stimuli? No, it does not. All it establishes is that soon after the brain discriminates the second stimulus, it arrives at the interpretation that a single object has been in motion, with a color change occurring just about there. (You understood the sentence I just spoke--I didn't have to draw you a picture. The brain doesn't have to draw itself pictures, either, if it can understand its own interpretations!)

Note that I am not saying that there couldn't be some form of continuous brain-representation of the spot at successive place-times, as Goodman puts it, but just that there needn't be, on the evidence so far considered. It doesn't follow from the undeniable fact that it seems to the subject as if there has been continuous motion of the spot (with an abrupt midcourse color change). This is a crucial pivot point in a host of arguments (or should we say, argument-failures). Let's go over the ground again, more slowly.

1. It definitely does seem to the subject as if there has been continuous motion of the spot from left to right.

So, on standard materialist assumptions, it follows that

2. There has been representation of some sort in the subject's brain of the spot being (or having been) in continuous motion.

But it does not follow from (2) that

3. There has been continuous (or even roughly continuous) representation of the motion of the seeming-spot--via a succession of "frames" or something like that.

For instance, the sentence

4. The moon goes round the earth in an elliptical orbit.

is a representation of the moon as being in continuous motion, but it is not itself a continuous or even roughly continuous representation of the moon's motion. You don't need a continuous representation to represent motion as continuous, and, as color-by-numbers shows, you don't need a homogeneous representation to represent a property as homogeneous. In general, you can't read the properties of a representational medium directly off the properties represented by it. But often it is tempting to make this illicit inference.
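The point that a representation need not share the properties it represents can be made concrete. In this sketch, a single record states that one spot moved from A to B and changed color partway along; it contains no frames, yet any question about the spot's color at an intermediate moment can be answered from it. The class name, its fields, and the assumption of uniform motion (so that time fraction equals path fraction) are hypothetical, chosen only to mirror the color phi case.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MotionInterpretation:
    """One discrete record that represents the spot as moving continuously.

    Time t runs from 0.0 (first flash) to 1.0 (second flash); uniform
    motion is assumed, so t is also the fraction of the path covered.
    """
    start_color: str
    end_color: str
    switch_at: float   # where along the path the color seemed to change

    def color(self, t):
        """Which color the spot is represented as having at time t."""
        return self.start_color if t < self.switch_at else self.end_color

seen = MotionInterpretation("red", "green", switch_at=0.5)
print(seen.color(0.25), seen.color(0.75))   # red green
```

Three stored values stand in for the whole trajectory, just as the sentence about the moon stands in for its entire orbit: the content is continuous motion, while the medium is a handful of discrete items.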

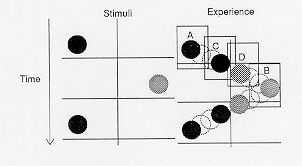

When people learn of the color phi phenomenon, I find that their first impromptu model posits something like a delay loop for the representation of the actual stimuli (the red and green spots), so that the brain has time, in a sort of editing studio somewhere between the eyes and "consciousness," to interpolate a few frames to "fill in" the temporal and spatial gap between the two stimuli (A and B) with some meaningful transition. Goodman succumbs to this temptation in the quoted passage. The idea is that this interpolated material must be provided by the brain for the eventual presentation to . . . to whom? To the audience in the Cartesian Theater. But this supposed presentation process is entirely gratuitous. Why should the brain bother making frames C and D if, down in the editing studio, it has already arrived at the interpretation of the stimuli as an instance of motion-with-color-change? For whose benefit would all this painting be done? There is no further act of interpretation needed, and indeed, there is no one in the brain to look at frames C and D.[1]

The brain engages in interpretation or discrimination all the time, at all levels, but there is a natural tendency to think of all interpretation as deferred until it can be done "in consciousness" (by the Self, presumably). And if interpretation were so deferred, then it would stand to reason that the interpretations accomplished by the Self would have to be based on something--something "in consciousness." And so we are attracted to the idea of the brain having to provide that basis in some sort of re-presentation (in a relatively uninterpreted format) of the data on which it has already based its interpretations! There is a tendency, in other words, to think of "filling in" as something the brain has to do to provide the Self with the evidence--the "sense data", to speak in antique terms--on which to make judgments.

But the question of whether the brain "fills in" in one way or another is not a question on which introspection by itself can bear, for introspection provides us--the subject as well as the "outside" experimenter--only with the content of representation, not with the features of the representational medium itself. For evidence about that, we need to conduct further experiments.[2]

For some phenomena, we can already be quite sure that the form of representation is a version of color-by-numbers, not a version of bit-mapping. Consider how the brain must deal with wallpaper, for instance. Suppose you walk into a room and notice that the wallpaper is a regular array of hundreds of identical sailboats, or--let's pay homage to Andy--portraits of Marilyn Monroe. We know that you don't foveate, and don't have to foveate, each of the identical images in order to see the wallpaper as hundreds of identical images of Marilyn Monroe. Your foveal vision identifies one or a few of these and somehow your visual system just generalizes--arrives at the conclusion that the rest is "more of the same." We know that the images of Marilyn that never get examined by foveal vision cannot be identified by parafoveal vision--it simply lacks the resolution to distinguish Marilyn from various Marilyn-shaped blobs. Nevertheless, what you see is not wallpaper of Marilyn in the middle surrounded by various indistinct Marilyn-shaped blobs; what you see is wallpaper composed of hundreds of identical Marilyns. Now it is a virtual certainty that nowhere in the brain is there a representation of the wall that has high-resolution bit-maps that reproduce, xerox-wise, the high-resolution image of Marilyn that you have foveated. The brain certainly would not go to the trouble of doing that filling in! Having identified a single Marilyn, and having received no information to the effect that the other blobs are not Marilyns, it jumps to the conclusion that the rest are Marilyns, and labels the whole region "more Marilyns" without any further rendering of Marilyn at all. Of course it does not seem that way to you. It seems to you as if you are actually seeing hundreds of identical Marilyns. And in one sense you are: there are, as I said, hundreds of identical Marilyns out there on the wall, and you're seeing them. 
What is not the case, however, is that there are hundreds of identical Marilyns represented in your brain. Your brain just represents that there are hundreds of identical Marilyns.
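The "more of the same" labeling can be sketched as follows, under loudly simplifying assumptions: the wall is a collection of cells, a few cells are "foveated" and identified exactly, the rest yield only a coarse shape class, and the hypothetical function `interpret_wallpaper` labels the whole wall once if nothing contradicts the foveated identification. The result stores one label and a count, not hundreds of high-resolution copies.

```python
def interpret_wallpaper(foveated, coarse_shapes, blob_shape):
    """Generalize from a few sharply identified cells to the whole wall.

    foveated:      identities of the few cells seen at high resolution
    coarse_shapes: low-resolution shape class of every other cell
    blob_shape:    the coarse shape that the foveated identity produces
    """
    identities = set(foveated)
    if len(identities) != 1:
        return None                  # no single pattern to generalize from
    (identity,) = identities
    if any(shape != blob_shape for shape in coarse_shapes):
        return None                  # contradicting evidence: revise
    # One label for the whole region; nothing is re-rendered per cell.
    return {"label": f"more {identity}s",
            "count": len(foveated) + len(coarse_shapes)}

wall = interpret_wallpaper(
    foveated=["Marilyn", "Marilyn"],
    coarse_shapes=["Marilyn-shaped blob"] * 298,
    blob_shape="Marilyn-shaped blob",
)
print(wall)   # {'label': 'more Marilyns', 'count': 300}
```

If one cell's coarse shape were grossly different (say, a sailboat silhouette), the generalization would fail; subtler differences that leave the coarse shape unchanged would pass unnoticed, which is the kind of variation one could manipulate experimentally.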

Experiments with wallpaper would no doubt show us a lot about the generalization powers of vision. By making small or great variations in the pattern of repetitions, and seeing how gross the failures of generalization have to be before subjects notice, we can learn about the powers of discernment and the conditions of generalization. (In fact, in your youth you already participated in such informal experiments. Recall the picture puzzles in which your task is to discover which two clowns, in the row of apparently identical clowns, are the twins. This is usually a slow and difficult visual task.)

Now we can understand how we can be oblivious to our blind spots even when looking at a plaid surface. The brain doesn't have to "fill in" for the blind spot, since the region in which the blind spot falls is already labeled. If the brain received contradictory evidence from some region, it would abandon or adjust its generalization, but not getting any evidence from the blind-spot region is not the same as getting contradictory evidence. The absence of confirming evidence from the blind-spot region is no problem for the brain; since the brain has no precedent of getting information from that gap of the retina, it has not developed any epistemically hungry agencies demanding to be fed from that region. Among all the homunculi of vision, not a single one has the role of coordinating information from that region of each eye, so when no information arrives from those sources, no one complains. The area is simply neglected.
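The difference between getting no evidence and getting contradictory evidence is easy to state in code. In this sketch (the function name and the convention of `None` for "no signal ever arrives" are assumptions for illustration), a region label is kept unless some received sample actually disagrees with it; samples that never arrive, as from the blind spot, are not treated as disagreement, and nothing is supplied in their place.

```python
def keep_label(label, samples):
    """Keep the region's label unless some received sample contradicts it.

    `samples` holds one reading per location; None means no signal ever
    arrives from that location (as with the blind spot).
    """
    received = [s for s in samples if s is not None]
    return all(s == label for s in received)

plaid = ["plaid", "plaid", None, None, "plaid"]      # None: blind-spot locations
print(keep_label("plaid", plaid))                    # True: the gap is ignored
print(keep_label("plaid", ["plaid", "blue", None]))  # False: a real contradiction
```

No value is ever written into the `None` positions: the function neither complains about them nor fills them in, which is the sense in which the area is simply neglected.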

In other words, all normally sighted people "suffer" from a tiny bit of "anosognosia"--we are (normally) unaware that we are receiving no visual information from our blind spots. The difference between us and sufferers of pathological neglect or other forms of anosognosia is that some of their complainers have been killed. (Kinsbourne, 1980, calls them "cortical analyzers.")

This suggests a fundamental principle, which can be applied more widely to cases of putative filling in. We might call it the Thrifty Producer Principle:

If no one is going to look at it, don't waste effort providing it.[3]

The brain's job isn't filling in. The brain's job is finding out.[4] Once the brain has made a particular discrimination (for instance, of motion, or of the uniformity of color in an area), the task of interpretation is done; no further presentation of the "evidence" on which it is based is required, and hence no further rendering of the evidence for the benefit of the Judge. Applying the Thrifty Producer Principle, we can see that any further representations with special representational properties would be gratuitous. But it certainly doesn't seem that way "from the first-person point of view." Consider the following spuriously compelling line of reasoning:

1. For something to seem to happen, something else must really happen, namely a real seeming-to-happen must happen.

(So far so good: this is just a tautology, of course.) For example, for something to seem red to somebody, a real seeming-red-to-somebody must happen.

2. But a real seeming-red-to-somebody cannot just consist in somebody judging or thinking that something seems red.

(Why not? Because there is a difference between a subject (merely) judging that something seems red, and something really or actually seeming red to the subject.)

3. So we must posit something extra to be the "immediately apprehended" grounds for the judgment, or perhaps, even, to be the object of the judgment. This something extra is the phenomenon of the occurrence of subjective red, the instantiation of a special (and mysterious) sort of property.

The flaw in this argument comes in step 2. I can understand what somebody would be asserting who said:

"I don't just say that it seems pink to me; it really does seem pink to me!"

This is naturally interpreted as an avowal of sincerity, a denial that one's words on this occasion are merely mouthed, not meant. But the argument above depends on making sense of this variation:

"I don't just think (or judge, or believe) that it seems pink to me; it really seems pink to me!"

What on earth could the difference be? I submit that this speech has no coherent interpretation--or at least, the burden of proof is on those who claim that this is not nonsense.

What is so wrong, finally, with the idea of "filling in"? It suggests that the brain is providing something when in fact the brain is ignoring something; it mistakes the omission of a representation of absence for the representation of presence. And this leads even very sophisticated thinkers to make crashing mistakes. It leads Goodman, Eccles, and Libet, among others, to some bizarre metaphysical speculations on "backwards projection." And it leads Edelman (1990) to make the following claim:

"One of the most striking features of consciousness is its continuity." (p. 119)

This is utterly wrong. One of the most striking features of consciousness is its discontinuity. Another is its apparent continuity. One makes a big mistake if one attempts to explain its apparent continuity by describing the brain as "filling in" the gaps.

Baars, B., 1988, A Cognitive Theory of Consciousness, Cambridge: Cambridge Univ. Press.

Dennett, D. C., and Kinsbourne, M., in press, "Time and the Observer: the Where and When of Consciousness in the Brain," Behavioral and Brain Sciences.

Eccles, J. C., 1977, in Popper, K., and Eccles, J. C., The Self and Its Brain, Berlin: Springer Verlag.

Goodman, N., 1978, Ways of Worldmaking, Brighton, Sussex: Harvester.

Hardin, C. L., 1988, Color for Philosophers: Unweaving the Rainbow, Indianapolis: Hackett.

Jackendoff, R., 1987, Consciousness and the Computational Mind, Cambridge, MA: MIT Press/A Bradford Book.

Kinsbourne, M., 1980, "Brain-based limitations on mind," in R. W. Rieber, ed., Body and Mind: Past, Present and Future, New York: Academic Press, pp. 155-75.

Libet, B., 1981, "The experimental evidence for subjective referral of a sensory experience backwards in time: reply to P. S. Churchland," Philosophy of Science, 48, pp. 182-97.

Libet, B., 1985, "Subjective Antedating of a Sensory Experience and Mind-Brain Theories: Reply to Honderich (1984)," J. Theoret. Biol., 114, pp. 563-570.

Margolis, H., 1987, Patterns, Thinking and Cognition, Chicago: Univ. of Chicago Press.

Minsky, M., 1975, "A Framework for Representing Knowledge," Memo 306, AI Lab, MIT, Cambridge, MA.

Minsky, M., 1985, The Society of Mind, New York: Simon and Schuster.

van Tuijl, H. F. J. M., 1975, "A New Visual Illusion: Neonlike Color Spreading and Complementary Color Induction between Subjective Contours," Acta Psychologica, 39, pp. 441-45.

Warren, R. M., 1970, "Perceptual restoration of missing speech sounds," Science, 167, pp. 392-93.

List of figures:

1. Parrot without labels
2. Parrot with "color by number" labels
3. Parrot as bitmap
4. Van Tuijl neon color-spreading
5. #97 region label
6. #97 as bitmapped region
7. Color phi with frames A, B, C, D

1. In "Time and the Observer: the Where and When of Consciousness in the Brain," (Dennett and Kinsbourne, in press) we show that this delay-loop editing hypothesis is worse than gratuitous; it is contradicted by behavioral evidence about reaction times.

2. For instance, Roger Shepard's initial experiments with the mental rotation of cube diagrams showed that it certainly seemed to subjects that they harbored roughly continuously rotating representations of the shapes they were imagining, but it took further experiments, probing the actual temporal properties of the underlying representations, to provide (partial) confirmation of the hypothesis that they were actually doing what it seemed to them they were doing. (See Shepard, R. N., and Cooper, L. A., Mental Images and Their Transformations, Cambridge, MA: MIT Press/A Bradford Book, 1982.)

3. Sometimes, it is less costly for the brain to refrain from filling in. Minsky (1975, 1985) draws attention to the ubiquity of defaults in our representational systems--"Tommy kicked the ball to Billy." --now, was the ball (in your mind) a soccer ball, a beach ball, was it red? This is a genuine form of (informational) filling in, not to be confused with gratuitous rendering needed to "prevent gaps" in the representation.

4. Marcel Kinsbourne first suggested the slogan "Finding out versus filling in" at the ZiF conference on the Phenomenal Mind, May, 1990, Bielefeld.